Welcome!#

The Atlas IP67 ATP200S camera is a GigE Vision and GenICam compliant camera capable of data transfer rates over 600 MB/s (5 Gbps), allowing for high resolutions and frame rates over standard copper Ethernet cables up to 100 meters. It features a robust M8 I/O connector, an M12 interface connector with PoE, and Active Sensor Alignment for superior optical performance. Its dust-proof and water-resistant camera case and lens tube make the Atlas IP67 camera well suited to industrial applications such as factory automation and inspection, autonomous robotics, logistics, food and beverage, medical, biometrics and more.

Safety and Precautions#

Follow these guidelines carefully before using your ATP200S.

Definitions and Symbols#

The following warning, safety, and tip icons are used in this document.

The Warning icon indicates a potentially hazardous situation. If not avoided, the situation can result in damage to the product.

The ESD icon indicates a situation involving electrostatic discharge. If not avoided, the situation can result in damage to the product.

The Help icon indicates important instructions and steps to follow.

The Light Bulb icon indicates useful hints for understanding the operation of the camera.

The Computer icon represents useful resources found outside of this documentation.

General Safety Notices#

Powering the Camera

- The camera may not work, may be damaged, or may exhibit unintended behavior if powered outside of the specified power range.

- When using Power over Ethernet, the power supply must comply with the IEEE 802.3af standard.

- When using the GPIO, the supplied power must be within the stated voltage range.

- See the Power section for further information.

Operating Temperature

- The camera may not work, may be damaged, or may exhibit unintended behavior if operated outside of the specified temperature range.

- See the Temperature section for further information.

Electrostatic Discharge

- Ensure proper precautions are implemented to prevent damage from electrostatic discharge.

Image Quality

- Dust or fingerprints on the sensor may result in a loss of image quality.

- Work in a clean and dust-free environment.

- Attach the dust cap to the camera when a lens is not mounted.

- Use only compressed ionized air or an optics cleaner to clean the surface of the sensor window.

Contact Us#

Think LUCID. Go LUCID.

Contact Sales

https://thinklucid.com/contact-us/

Contact Support

https://thinklucid.com/support/

Installing the Camera Hardware#

Mounting#

The camera is equipped with six M4 mounting holes. The camera front contains one pair of M4 holes on each side. An additional pair of M4 holes is found at the back of the bottom side.

Connector#

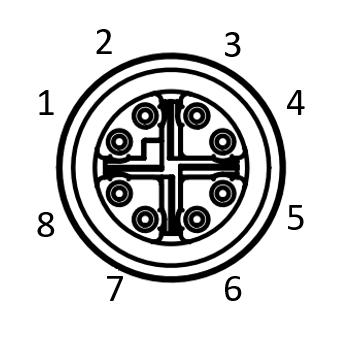

The ATP200S camera uses an X-coded 8-pin M12 connector with the following pin mapping, as seen from the rear of the camera.

| Pin Number (Camera Side) | Pin Description |

|---|---|

| 1 | BI_DA+ |

| 2 | BI_DA- |

| 3 | BI_DB+ |

| 4 | BI_DB- |

| 5 | BI_DD+ |

| 6 | BI_DD- |

| 7 | BI_DC- |

| 8 | BI_DC+ |

GigE Cable#

- The ATP200S uses an X-coded 8-position M12 connector (compliant with the IEC 61076-2-109 standard) for Ethernet communication.

- For the best performance, a shielded Cat5e or higher Ethernet cable should be used. STP shielding is recommended to minimize electromagnetic interference in environments with harsh EMI conditions.

- An unshielded or lower grade/quality Ethernet cable may result in loss of camera connection and/or lost and inconsistent image data.

- The maximum cable length from camera to host with no switch or repeater in between is 100 meters.

- LUCID Vision Labs recommends using qualified Ethernet cables from our web store.

GPIO Cable#

The ATP200S is equipped with an 8-pin General Purpose Input/Output (GPIO) connector at the back.

- When using a shielded GPIO cable in an industrial setting, care must be taken to prevent ground loops from forming between the camera chassis, Ethernet PoE power sourcing equipment, IT infrastructure, and devices connected to GPIO.

- The GPIO cable can be shielded if terminated properly. An improper GPIO shield termination scheme can cause substantial EMC emissions and immunity issues, induce noise, and damage the camera or equipment attached to the GPIO cable. Using a shielded GPIO cable requires careful consideration of the system-level EMC when shielded Ethernet cables and PoE power are used.

- Only use shielded GPIO cables when powering the camera through GPIO, and pay attention to the power supply earth connection and to the Ethernet shield termination, ideally by AC-coupling the Ethernet shield at the host chassis or by using UTP cable.

- Any GPIO cable with an M8 connector compliant with IEC 61076-2-104 will work. An example connector that mates with the ATP200S is Phoenix Contact Part Number 1424237.

- Recommended wire thickness is AWG26 or AWG28.

- LUCID Vision Labs recommends using qualified GPIO cables from our web store.

Lens#

C mount lens#

C mount lenses can be used on a C mount camera. According to the standard, the C mount flange back distance is 17.53 mm.

Note about using a heavy lens with the camera.

Mounting a heavy and long lens may cause damage to the camera board. If the lens is considerably heavier than the camera, the lens’ weight may exert significant force on the lens mount attached to the camera’s board causing unexpected damage to the board and soldered components. If a heavy lens is necessary for the production environment, it is recommended to use the lens as a mounting point rather than the camera to avoid damage to the camera.

IP67 Rating#

This section describes the parts required to achieve the IP67 rating for ATP200S cameras.

IP67 Cables#

Use IP67-rated cables with your ATP200S unit to keep dust and water out of the connection ports.

Custom cables must be qualified by the integrator to maintain the IP67 rating.

Find IP67-rated GigE and GPIO cables in the Cables section of the Lucid web store.

GPIO Plug#

When the GPIO port on the ATP200S is not in use, you must attach the GPIO plug to achieve the IP67 rating.

Lens Tube#

Without a lens tube, the camera achieves the IP50 rating, which is protection against dust but not water. With a lens tube from LUCID Vision Labs, the ATP200S achieves the IP67 rating, which is dust proof and water resistant.

Custom lens tubes must be qualified by the integrator to maintain the IP67 rating.

Adapter Ring#

The adapter ring is used to attach a lens tube onto the camera. When attaching the adapter ring, the C-mount barrel on the camera should also extend past the ring by approximately 0.3mm.

Use a rubber piece such as the Multipurpose Neoprene Rubber Strip from McMaster-Carr (part number 1372N13) to assist in removing the adapter ring.

Configuring the Camera and Host System#

Installing the Ethernet Driver#

LUCID Vision Labs recommends updating to the latest version of your Ethernet adapter’s driver before connecting your camera. You may need to navigate to the manufacturer’s website to find the latest version of the driver.

Device Discovery and Enumeration#

Lucid cameras are discovered and enumerated with the following process:

Camera IP Setup#

There are three methods used by LUCID cameras to obtain an IP address:

- Persistent – The camera uses a fixed IP address

- Dynamic Host Configuration Protocol (DHCP) – The camera is assigned an address from a DHCP server

- Link-Local Address (LLA) – The camera obtains an address in the Link-Local Address range from 169.254.1.0 to 169.254.254.255

Persistent IP and DHCP configurations can be disabled on the camera. The default camera configuration is as follows:

| IP Configuration | Default Setting |

|---|---|

| Persistent IP | Disabled |

| DHCP | Enabled |

| LLA | Enabled (always enabled) |

Out of the box, the camera first attempts to connect using DHCP. If the camera is unable to connect using DHCP, it will use Link-Local Addressing.
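As a quick host-side check, an address reported by a camera can be classified against the Link-Local range quoted above. The sketch below uses only Python's standard `ipaddress` module; the helper name `is_lla_address` is hypothetical and is not part of any LUCID SDK.

```python
import ipaddress

# Link-Local range quoted in this section (inclusive bounds).
LLA_FIRST = ipaddress.IPv4Address("169.254.1.0")
LLA_LAST = ipaddress.IPv4Address("169.254.254.255")


def is_lla_address(address: str) -> bool:
    """Return True if `address` lies inside the Link-Local range above."""
    ip = ipaddress.IPv4Address(address)
    return LLA_FIRST <= ip <= LLA_LAST


if __name__ == "__main__":
    for candidate in ("169.254.10.20", "192.168.0.10"):
        kind = "LLA" if is_lla_address(candidate) else "DHCP or persistent"
        print(f"{candidate}: {kind}")
```

A camera that unexpectedly reports an LLA address on a network with a DHCP server may indicate that the DHCP request did not succeed.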

Setting Up Persistent IP#

The following pseudocode demonstrates setting up persistent IP:


// Connect to camera

// Get device node map

GevCurrentIPConfigurationPersistentIP = true;

GevPersistentIPAddress = 192.168.0.10; //Enter persistent IP address for the camera

GevPersistentSubnetMask = 255.255.255.0;

GevPersistentDefaultGateway = 192.168.0.1;

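Before writing persistent IP values to the camera, it can help to sanity-check them on the host. The following is a minimal sketch using Python's standard `ipaddress` module; `check_persistent_ip` is a hypothetical helper, and the checks it performs are illustrative rather than a complete validation.

```python
import ipaddress
from typing import List


def check_persistent_ip(address: str, subnet_mask: str, gateway: str) -> List[str]:
    """Return a list of problems found in a persistent IP configuration."""
    problems = []
    # strict=False accepts a host address combined with its subnet mask.
    network = ipaddress.IPv4Network(f"{address}/{subnet_mask}", strict=False)
    ip = ipaddress.IPv4Address(address)
    if ip in (network.network_address, network.broadcast_address):
        problems.append("address is the network or broadcast address")
    if ipaddress.IPv4Address(gateway) not in network:
        problems.append("gateway is outside the camera's subnet")
    return problems


if __name__ == "__main__":
    # Values taken from the pseudocode above.
    print(check_persistent_ip("192.168.0.10", "255.255.255.0", "192.168.0.1"))
```

An empty list means none of the checked problems were found.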

Bandwidth Management#

Jumbo Frames#

LUCID Vision Labs recommends enabling jumbo frames on your Ethernet adapter. A jumbo frame is an Ethernet frame that is larger than 1500 bytes. Most Ethernet adapters support jumbo frames, although the feature is usually turned off by default.

Enabling jumbo frames on the Ethernet adapter allows a packet size of up to 9000 bytes to be set on the Atlas. The larger packet size enables optimal performance on high-bandwidth cameras and usually reduces CPU load on the host system. Note that to set a 9000-byte packet size on the camera, the Ethernet adapter must support a jumbo frame size of 9000 bytes or higher.
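To illustrate why a larger packet size reduces host load, the sketch below counts how many packets are needed to carry one image at two packet sizes. It assumes 36 bytes of per-packet overhead (IPv4, UDP, and GVSP data headers) and a hypothetical 20,000,000-byte frame; neither figure is taken from this manual.

```python
# Assumed per-packet overhead counted inside the packet size:
# IPv4 header (20) + UDP header (8) + GVSP data header (8).
HEADER_BYTES = 36


def packets_per_frame(frame_bytes: int, packet_size: int) -> int:
    """Number of packets needed to carry `frame_bytes` of image data."""
    payload = packet_size - HEADER_BYTES
    if payload <= 0:
        raise ValueError("packet size is too small to carry image data")
    # Integer ceiling division.
    return (frame_bytes + payload - 1) // payload


if __name__ == "__main__":
    frame = 20_000_000  # hypothetical frame size in bytes
    for size in (1500, 9000):
        print(f"packet size {size}: {packets_per_frame(frame, size)} packets")
```

Under these assumptions, a 9000-byte packet size needs roughly one sixth of the packets that a 1500-byte packet size does, which means fewer packets for the host to process per frame.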

The following table lists some of the Ethernet adapters that LUCID Vision Labs has tested:

| Product Name | Maximum Jumbo Frame Size (bytes) |

|---|---|

| ADLINK PCIe-GIE64+ | 9000 |

| Neousys PCIe-PoE354at | 9500 |

| Intel EXPI9301CT (non-POE) | 9000 |

| ioi GE10P-PCIE4XG301 | 16000 |

| ioi DGEAP2X-PCIE8XG302 | 16000 |

If you still experience issues such as lost packets or dropped frames, you can also try:

- Updating the Ethernet adapter driver (you may need to enable jumbo frames again after updating).

- Increasing the receive buffer size in your Ethernet adapter properties.

- Reducing the DeviceLinkThroughputLimit value (this may reduce maximum frame rate).

Receive Buffers#

The receive buffer size is the amount of system memory that the Ethernet adapter can use to receive packets. Some Ethernet adapter drivers, or the operating system itself, may set the receive buffer size to a low value by default, which may result in decreased performance. Increasing the receive buffer size, however, also increases system memory usage.

Device Link Throughput Limit#

The Device Link Throughput Limit is the maximum available bandwidth for transmission of data represented in bytes per second. This can be used to control the amount of bandwidth used by the camera. The maximum available frame rate may decrease when this value is lowered since less bandwidth is available for transmission.
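The relationship between the throughput limit and frame rate can be estimated with simple arithmetic. The sketch below is an upper-bound estimate only: it ignores packet headers and inter-packet gaps, and the frame size used in the example is hypothetical.

```python
def max_frame_rate(throughput_limit_bytes_per_s: int, frame_bytes: int) -> float:
    """Upper bound on frames per second for a given throughput limit."""
    if frame_bytes <= 0:
        raise ValueError("frame size must be positive")
    return throughput_limit_bytes_per_s / frame_bytes


if __name__ == "__main__":
    frame = 20_000_000  # hypothetical frame size in bytes
    for limit in (600_000_000, 300_000_000):
        print(f"{limit} B/s -> at most {max_frame_rate(limit, frame):.1f} fps")
```

Halving the limit halves the achievable frame rate for the same image size, which is why lowering DeviceLinkThroughputLimit can reduce the maximum frame rate.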

User Sets, Streamables, & File Access#

User Sets#

The ATP200S features two customizable user sets to load or save user-defined settings on the camera. Accessing the user set named Default will allow loading or saving of factory default settings. The camera will load the user set selected in UserSetDefault when powering up or when reset.

If the camera is acquiring images, AcquisitionStop must be called before loading or saving a user set.

Streamables#

A camera feature marked as Streamable allows the feature’s current value to be stored to and loaded from a file.

File Access#

The ATP200S features persistent storage for generic file access on the camera. This feature allows users to save and load a custom file up to 16 megabytes with UserFile. Users can also save and load User Set contents to and from a file.

Loading new firmware onto the camera may overwrite existing UserFile contents.

The following pseudocode demonstrates reading from the camera using file access:


// Connect to camera

// Get device node map

FileSelector = UserFile1;

FileOpenMode = Read;

FileOperationSelector = Open;

FileOperationExecute();

// Device sets FileOperationSelector = Close

// Read custom file from camera

FileOperationExecute();

// Device sets FileOperationSelector = Open


The following pseudocode demonstrates writing to the camera using file access:


// Connect to camera

// Get device node map

FileSelector = UserFile1;

FileOpenMode = Write;

FileOperationSelector = Open;

FileOperationExecute();

// Device sets FileOperationSelector = Close

// Write custom file to camera

FileOperationExecute();

// Device sets FileOperationSelector = Open


User Sets, Streamables, and File Access functions do not load or save camera IP configuration settings.

Using the Camera#

Using Arena SDK#

The Arena Software Development Kit (SDK) is designed from the ground up to provide customers with access to the latest in industry standards and computer technology. The SDK supports Lucid GigE Vision cameras on both Windows and Linux platforms.

Using ArenaView#

The Arena SDK includes Lucid’s easy-to-use GUI called ArenaView. Based on the GenICam standard, ArenaView allows you to access and validate camera features quickly and easily through the GenICam XML-based feature tree. The viewer improves readability on higher resolutions and includes options for different color schemes.

For more information on Arena SDK and ArenaView, please refer to our website.

Using the Camera with Third Party Software#

Lucid’s cameras are compatible with many third party GigE Vision software packages including libraries from Cognex, MVTec, Matrox Imaging Library, Mathworks, and National Instruments. For more information on how to use your Atlas with other libraries, please refer to our 3rd Party Getting Software Guides.


Camera Specifications#

Power#

The ATP200S can be powered via the Ethernet cable using Power over Ethernet (PoE) or the GPIO using the pins described in the GPIO Characteristics section.

When using PoE, the power supply must comply with the IEEE 802.3af standard. You can find recommended parts to power the camera in our web store.

Temperature#

The Atlas should be kept in the following storage, operating, and humidity conditions.

| Condition | Range |

|---|---|

| Storage Temperature | -30 to 60°C |

| Operating Temperature | -20 to 55°C ambient |

| Humidity | Operating: 20% ~ 80%, relative, non-condensing |

Placing the camera outside of these conditions may result in damage to the device.

The Atlas camera is equipped with a built-in temperature sensor that can be read through the DeviceTemperature property.

The camera can get hot to touch if it has been streaming images for an extended period of time.

DeviceTemperature reports the temperature inside the camera and can show values outside of the operating temperature range. This is generally acceptable as long as the ambient temperature around the camera is kept within the stated operating temperature range.

GPIO Characteristics#

GPIO Pinout Diagram

GPIO connector as seen from the rear of the ATP200S. Pin colors correspond to the GPIO-M8 cable from LUCID Vision Labs.

| Pin Number | Pin Description |

|---|---|

| 1 (Red) | VAUX (12-24V DC Power Input) |

| 2 (Brown) | Non-isolated bi-directional GPIO channel (Line 2) |

| 3 (Orange) | VDD GPIO (3.5V Power Output) (Line 4) |

| 4 (Purple) | Non-isolated bi-directional GPIO channel (Line 3) |

| 5 (Black) | GND (Camera GND) |

| 6 (Blue) | OPTO GND (Opto-isolated Reference) |

| 7 (Yellow) | OPTO OUT (Opto-isolated Output) (Line 1) |

| 8 (Green) | OPTO IN (Opto-isolated Input) (Line 0) |

Maximum supported input voltage: 24V

Maximum supported output voltage: 24V

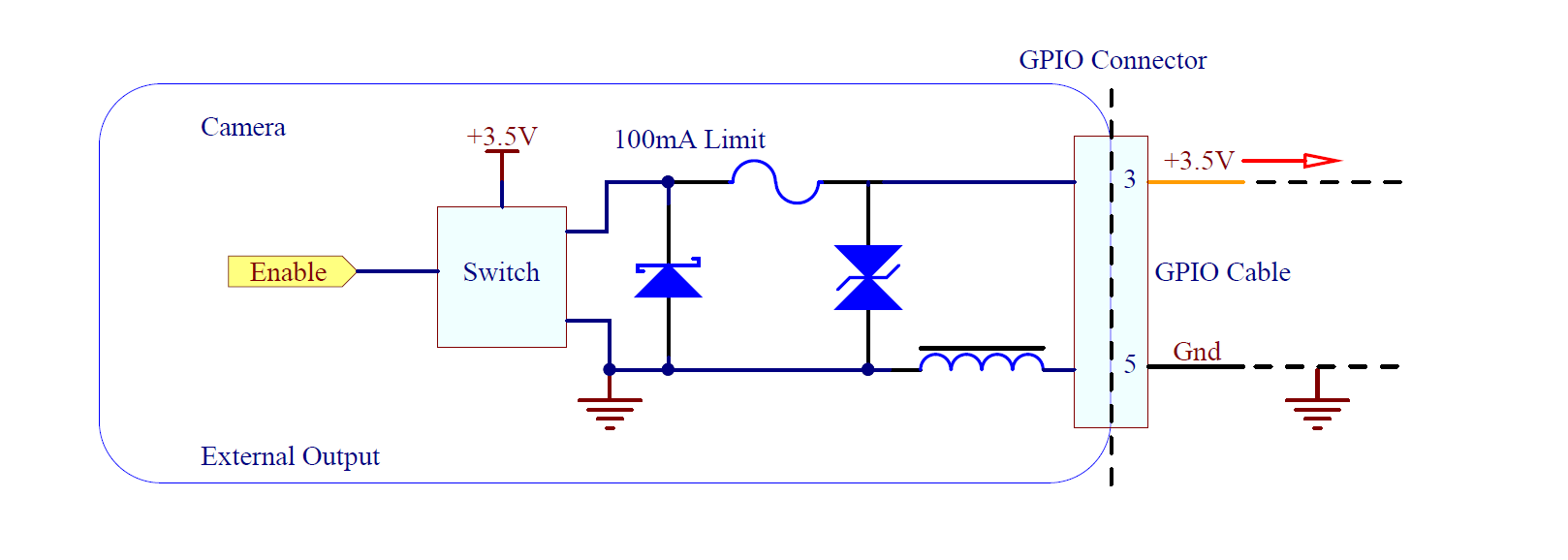

Consult the Turning on GPIO Voltage Output section for enabling VDD.

GPIO Schematics#

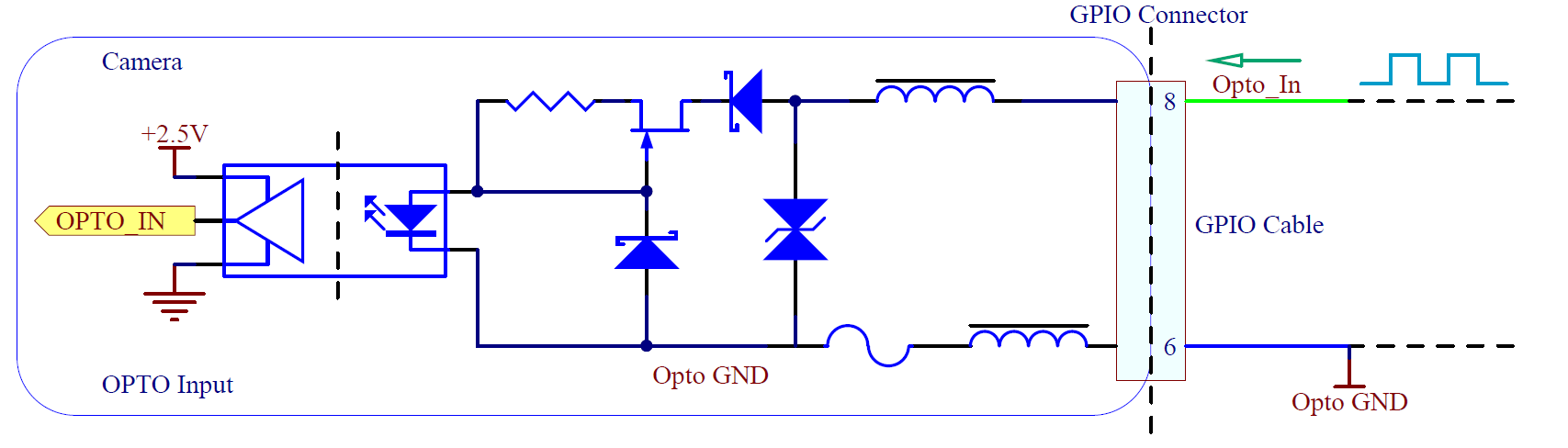

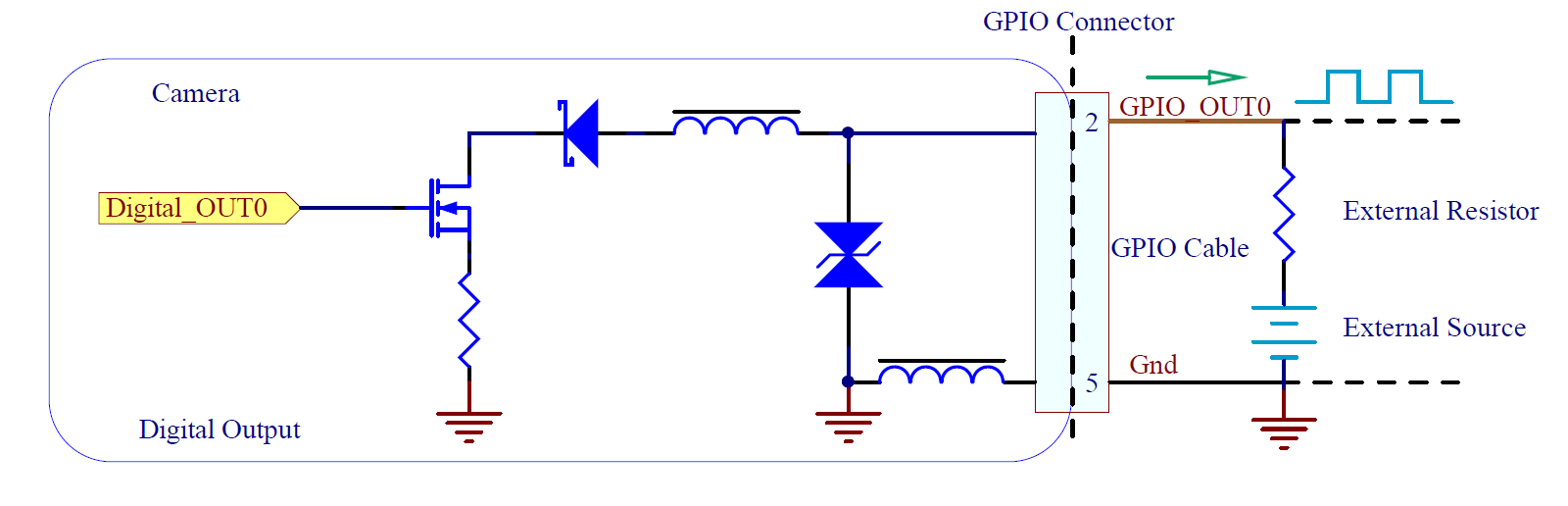

Opto-isolated Input – GPIO Line 0#

Opto-isolated Input Measurements:

| Voltage (V) | Max Rise Delay (us) | Max Fall Delay (us) | Max Rise Time (us) | Max Fall Time (us) | Min Pulse Input (us) | Min Input High (V) | Min Input Low (V) |

|---|---|---|---|---|---|---|---|

| 2.5 | 1 | 1 | 1 | 1 | 2 | 2.5 | 1.0 |

| 5 | 1 | 1 | 1 | 1 | 2 | 2.5 | 1.0 |

Sample values measured at room temperature. Results may vary over temperature and setup.

Maximum supported input voltage: 24V

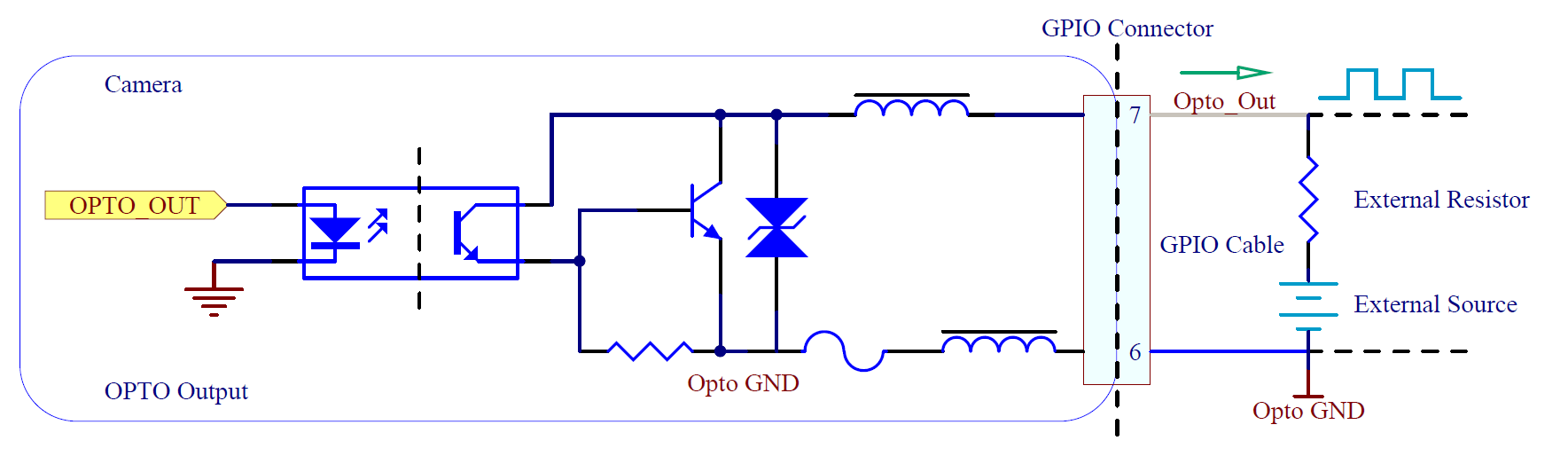

Opto-isolated Output – GPIO Line 1#

Opto-isolated Output Measurements:

| Voltage (V) | External Resistor (Ω) | Max Rise Delay (us) | Max Fall Delay (us) | Max Rise Time (us) | Max Fall Time (us) | Current (mA) | Low Level (V) |

|---|---|---|---|---|---|---|---|

| 2.5 | 150 | 50 | 5 | 40 | 5 | 5.7 | 0.9 |

| 2.5 | 330 | 50 | 5 | 40 | 5 | 2.9 | 0.8 |

| 2.5 | 560 | 50 | 5 | 40 | 5 | 1.9 | 0.5 |

| 2.5 | 1k | 50 | 5 | 40 | 5 | 1.2 | 0.3 |

| 5 | 330 | 50 | 5 | 50 | 5 | 6.6 | 0.9 |

| 5 | 560 | 50 | 5 | 50 | 5 | 4 | 0.7 |

| 5 | 1k | 50 | 5 | 50 | 5 | 2.4 | 0.5 |

| 5 | 1.8k | 50 | 5 | 50 | 5 | 1.4 | 0.4 |

| 12 | 1k | 50 | 5 | 60 | 5 | 6 | 0.9 |

| 12 | 1.8k | 50 | 5 | 60 | 5 | 3.4 | 0.9 |

| 12 | 2.7k | 50 | 5 | 60 | 5 | 2.4 | 0.7 |

| 12 | 4.7k | 50 | 5 | 60 | 5 | 1.5 | 0.5 |

| 24 | 1.8k | 60 | 5 | 60 | 5 | 7.1 | 0.9 |

| 24 | 2.7k | 60 | 5 | 60 | 5 | 4.7 | 0.9 |

| 24 | 4.7k | 60 | 5 | 60 | 5 | 2.8 | 0.7 |

| 24 | 6.8k | 60 | 5 | 60 | 5 | 2.1 | 0.6 |

Sample values measured at room temperature. Results may vary over temperature and setup.

Maximum supported output voltage: 24V

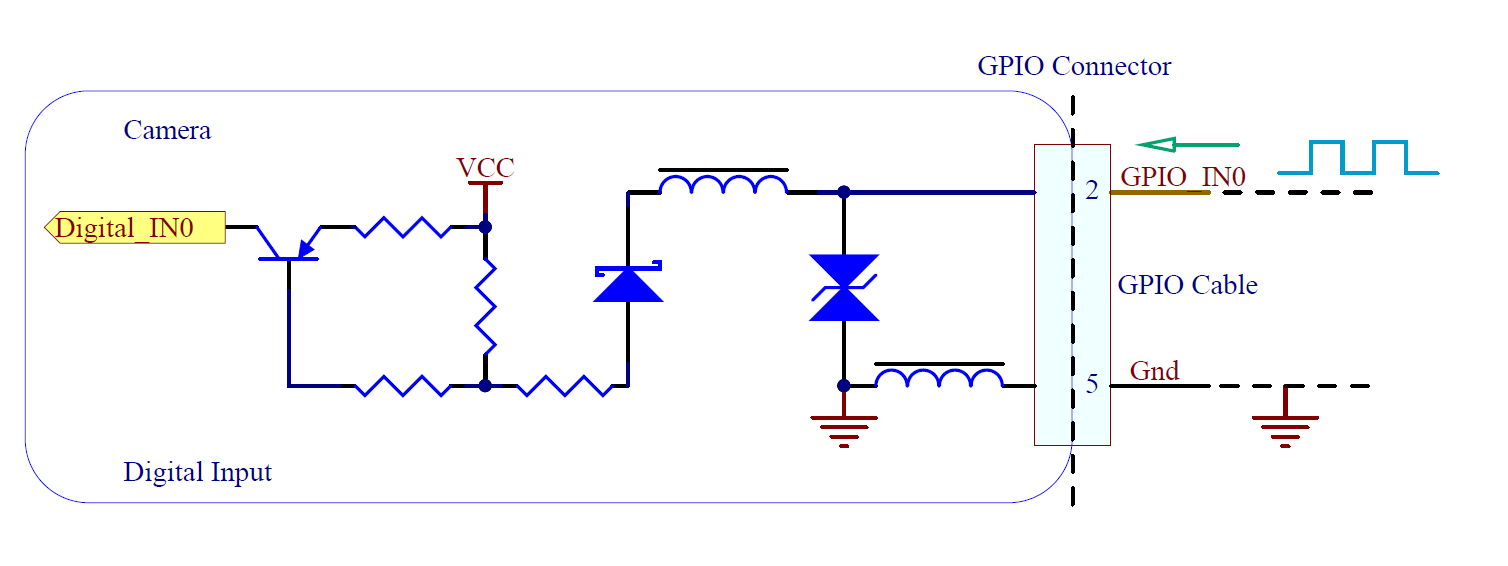

Non-isolated Input - GPIO Line 2#

Non-isolated Input Measurements:

| Voltage (V) | Max Rise Delay (us) | Max Fall Delay (us) | Max Rise Time (us) | Max Fall Time (us) | Min Pulse Input (us) | Min Input High (V) | Min Input Low (V) |

|---|---|---|---|---|---|---|---|

| 2.5 | 1 | 1 | 1 | 1 | 2 | 2.5 | 0.5 |

| 5 | 1 | 1 | 1 | 1 | 2 | 2.5 | 0.5 |

Typical values measured at room temperature. Results may vary over temperature.

Maximum supported input voltage: 24V

Non-isolated Output - GPIO Line 2#

Non-isolated Output Measurements:

| Voltage (V) | External Resistor (Ω) | Max Rise Delay (us) | Max Fall Delay (us) | Max Rise Time (us) | Max Fall Time (us) | Current (mA) | Low Level (V) |

|---|---|---|---|---|---|---|---|

| 2.5 | 150 | 0.5 | 0.5 | 1 | 0.5 | 4.3 | 1.3 |

| 2.5 | 330 | 0.5 | 0.5 | 1 | 0.5 | 2.6 | 1 |

| 2.5 | 560 | 0.5 | 0.5 | 1 | 0.5 | 1.8 | 0.8 |

| 2.5 | 1k | 0.5 | 0.5 | 1 | 0.5 | 1.1 | 0.6 |

| 5 | 330 | 0.5 | 0.5 | 1 | 0.5 | 5.6 | 1.4 |

| 5 | 560 | 0.5 | 0.5 | 1 | 0.5 | 3.7 | 1.1 |

| 5 | 1k | 0.5 | 0.5 | 1 | 0.5 | 2.3 | 0.9 |

| 5 | 1.8k | 0.5 | 0.5 | 1 | 0.5 | 1.4 | 0.7 |

| 12 | 1k | 0.5 | 0.5 | 1 | 0.5 | 5.5 | 1.4 |

| 12 | 1.8k | 0.5 | 0.5 | 1 | 0.5 | 3.2 | 0.9 |

| 12 | 2.7k | 0.5 | 0.5 | 1 | 0.5 | 2.3 | 0.9 |

| 12 | 4.7k | 0.5 | 0.5 | 1 | 0.5 | 1.5 | 0.7 |

| 24 | 1.8k | 0.5 | 0.5 | 2 | 0.5 | 6.5 | 1.6 |

| 24 | 2.7k | 0.5 | 0.5 | 2 | 0.5 | 4.5 | 1.3 |

| 24 | 4.7k | 0.5 | 0.5 | 2 | 0.5 | 2.6 | 0.9 |

| 24 | 6.8k | 0.5 | 0.5 | 2 | 0.5 | 1.8 | 0.8 |

Typical values measured at room temperature. Results may vary over temperature.

Maximum supported output voltage: 24V

Non-isolated Input - GPIO Line 3#

Same as Non-isolated Input – GPIO Line 2 (GPIO_IN is Pin 4, GND is Pin 5)

Non-isolated Output - GPIO Line 3#

Same as Non-isolated Output – GPIO Line 2 (GPIO_OUT is Pin 4, GND is Pin 5)

3.5V Output#

LED Status#

The Atlas camera is equipped with an LED that identifies the current state of the camera.

| LED Status | Status Information |

|---|---|

| Flashing red | Camera powered, but no Ethernet link established. |

| Flashing green | Camera powered, Ethernet link established, but no network traffic. |

| Solid green | Camera powered, Ethernet link established, and there is network traffic. |

| Flashing red/green | Firmware update in progress. |

| Solid red | Error. Firmware update failed. |

The following LED sequence occurs when the camera is powered up and connected to a network:

- LED off, plug in the Ethernet cable.

- LED on, flashing red.

- After link is established, LED becomes flashing green.

- Launch application and start capturing images, LED becomes solid green.

The status LED can also be controlled using the DeviceIndicatorMode property. Possible values are:

- Inactive: LED is off.

- Active: LED indicates camera status according to the above table.

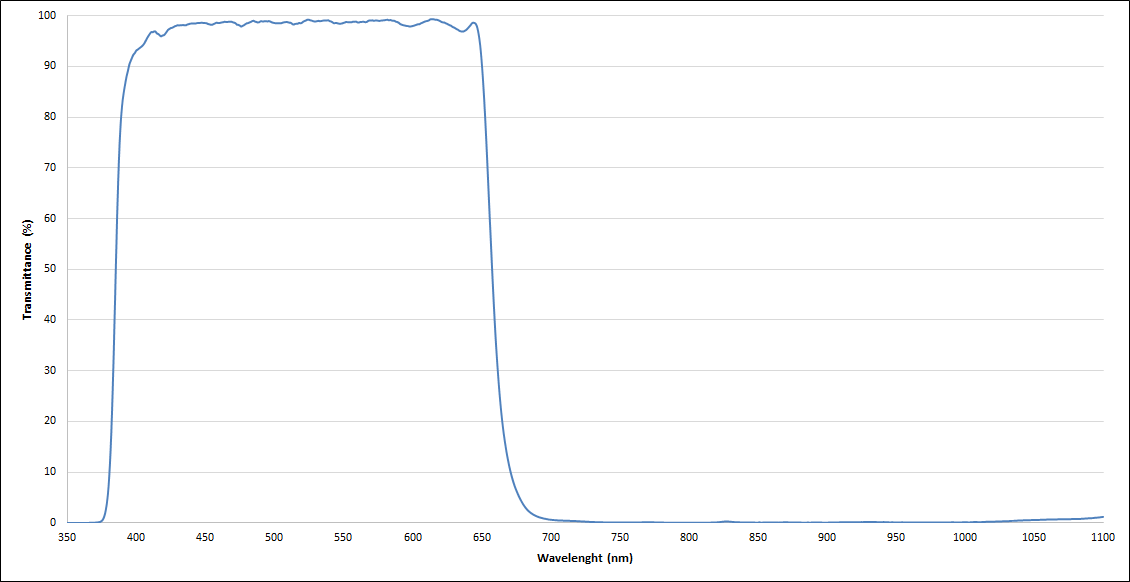

IR Filter#

Color cameras from LUCID Vision Labs are equipped with an IR filter that is installed under the gasket of the mount. Mono cameras are equipped with a transparent glass window instead of an IR filter. The dimensions of the IR filter / transparent glass window are as follows:

| Model | Size | Thickness |

|---|---|---|

| 55 x 55mm Atlas/Atlas10 TFL-Mount | 27.3 x 22.3mm | 1mm |

| 55 x 55mm Atlas/Atlas10 C-Mount | 18 x 14.5mm | 1mm |

The IR filter and transparent glass window have an anti-reflective coating on one side.

Specification Tests#

FCC#

This product has been tested and complies with the limits for a Class A digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference when the equipment is operated in a commercial environment. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instruction manual, may cause harmful interference to radio communications. Operation of this equipment in a residential area is likely to cause harmful interference, in which case the user will be required to correct the interference at his own expense.

Users are advised that any changes or modifications not approved by LUCID Vision Labs will void the FCC compliance. The product is intended to be used as a component of a larger system, hence users are advised that cable and other peripherals may affect overall system FCC compliance.

RoHS, REACH, and WEEE#

LUCID Vision Labs declares that the ATP200S camera conforms to the following directives and regulations:

- RoHS 2011/65/EU

- REACH 1907/2006/EC

- WEEE 2012/19/EU

CE#

LUCID Vision Labs declares that the ATP200S meets the requirements necessary for CE marking. The product complies with the requirements of the standards listed below:

- EN 55032:2012 Electromagnetic compatibility of multimedia equipment – Emission requirements

- EN 61000-3-2:2014 Harmonic Current Emissions

- EN 61000-3-3:2013 Voltage Fluctuations and Flicker

- EN 61000-4-2:2008 Electrostatic Discharge

- EN 61000-4-3:2010 Radiated RF Immunity

- EN 61000-4-4:2012 Electrical Fast Transient/Burst

- EN 61000-4-5:2014 Surge Transient

- EN 61000-4-6:2013 Conducted Immunity

- EN 61000-4-8:2009 Power Frequency Magnetic Field

- EN 61000-4-11:2004 Voltage Dips and Interruptions

Korean Communications Commission (KCC) statement#

A급 기기

(업무용 방송통신기자재)

Class A Equipment (Industrial Broadcasting & Equipment)

이 기기는 업무용(A급) 전자파적합기기로서 판매자 또는 사용자는 이 점을 주의하시기 바라며, 가정외의 지역에서 사용하는 것을 목적으로 합니다.

This is electromagnetic wave compatibility equipment for business (Class A). Sellers and users need to pay attention to it. This is for any areas other than home.

Shock and Vibration#

To ensure the camera’s stability, LUCID’s ATP200S camera is tested under the following shock/vibration conditions. After the testing, the camera showed no physical damage and could produce normal images during normal operation.

| Test | Standard | Parameters |

|---|---|---|

| Shock | DIN EN 60068-2-27 | Each axis (x/y/z), 20g, 11ms, +/- 10 shocks |

| Bump | DIN EN 60068-2-27 | Each axis (x/y/z), 20g, 11ms, +/- 100 bumps |

| Vibration (random) | DIN EN 60068-2-64 | Each axis (x/y/z), 4.9g rms, 15-500Hz, 0.05g²/Hz acceleration, 30min per axis |

| Vibration (sinusoidal) | DIN EN 60068-2-6 | Each axis (x/y/z), 10-58Hz: 1.5mm, 58-500 Hz: 10g, 1 oct/min, 1 hour 52 min per axis |

To protect the Atlas camera against shock and vibration, the camera should be mounted by securing all four M4 mounting holes on the bottom of the camera.

Camera Features#

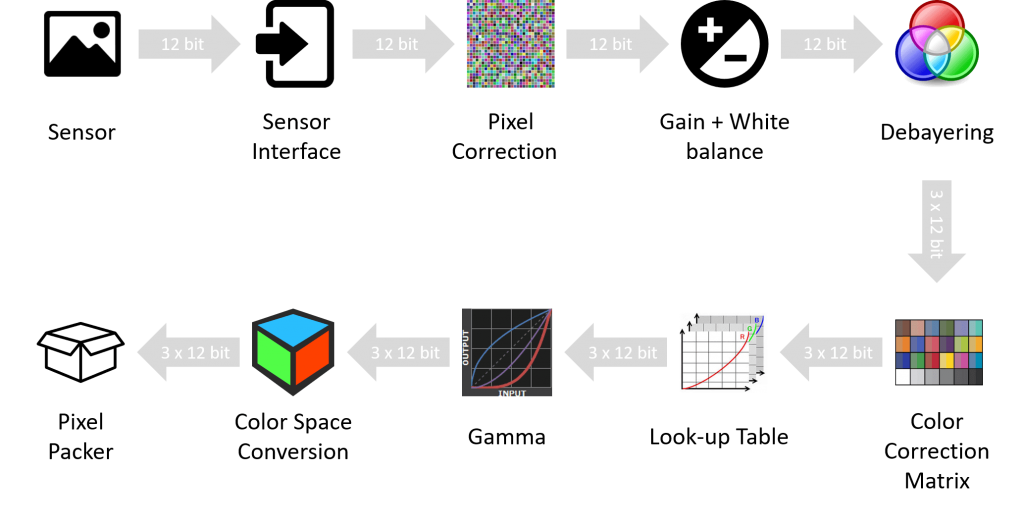

Image Processing Controls#

The ATP200S camera is equipped with the following image processing control flow.

The details of each of the image processing controls are described below.

Defect Pixel Correction#

The ATP200S supports a list of pixel coordinates to be corrected via firmware. The value of each pixel on the list is replaced by an interpolation of its neighboring pixel values. The camera ships with a pixel correction list that is loaded during the manufacturing process. Defective pixels are an inevitable result of the semiconductor manufacturing process, so it is normal for sensors to have some. As the camera operates longer in heat or is exposed to radiation, more defective pixels may appear. Users can update the pixel correction list at any time.
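The idea of replacing a listed pixel with a value interpolated from its neighbors can be sketched on the host as follows. This is an illustration only: the camera's actual interpolation method is not described here, and the sketch assumes a monochrome image stored as a list of rows and a simple average of the left and right neighbors. The name `correct_defects` is hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]


def correct_defects(image: Image, defects: List[Tuple[int, int]]) -> Image:
    """Return a copy of `image` with each (x, y) defect replaced by the
    mean of its available left and right neighbours in the original image."""
    corrected = [row[:] for row in image]
    for x, y in defects:
        row = image[y]
        neighbours = [row[i] for i in (x - 1, x + 1) if 0 <= i < len(row)]
        if neighbours:
            corrected[y][x] = sum(neighbours) // len(neighbours)
    return corrected


if __name__ == "__main__":
    dark_frame = [[10, 255, 20], [12, 11, 13]]
    print(correct_defects(dark_frame, [(1, 0)]))
```

On a color sensor, an interpolation would normally use neighbors of the same Bayer color rather than adjacent pixels; that case is not covered by this sketch.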

Steps to add a new pixel to the correction list

1. Set OffsetX and OffsetY to zero. Set Width and Height to their maximum allowed values.

2. Set Gain to zero and note the coordinates of any bright pixels in the image. Ensure the camera is not exposed to light by covering it with a lens cap and placing it in a dark box.

3. Fire the DefectCorrectionGetNewDefect command.

4. Enter the X-coordinate noted in step 2 into DefectCorrectionPositionX.

5. Enter the Y-coordinate noted in step 2 into DefectCorrectionPositionY.

6. Fire the DefectCorrectionApply command.

7. Repeat steps 3-6 as needed, then fire the DefectCorrectionSave command when done.

Pixel correction is still applied if the image geometry changes (e.g. applying ReverseX, ReverseY, a region of interest, or binning).

The table below shows the maximum number of defective pixels that can be added to the correction list.

| Model | List Length |

|---|---|

| ATL028S | 512 |

| ATL050S | 128 |

| ATP071S/ATL071S | 512 |

| ATL089S | 128 |

| ATL120S | 128 |

| ATL168S | 512 |

| ATL196S | 512 |

| ATL314S | 512 |

The following pseudocode demonstrates adding a defective pixel to the correction list:


// Connect to camera

// Get device node map

// Set maximum width and height

OffsetX = 0;

OffsetY = 0;

Width = Max Width;

Height = Max Height;

// Set constant ExposureTime and Gain

DefectCorrectionGetNewDefect();

DefectCorrectionPositionX = 25; // The X-coordinate of the blemish pixel

DefectCorrectionPositionY = 150; // The Y-coordinate of the blemish pixel

DefectCorrectionApply();

// Repeat the above four steps as needed. When complete:

DefectCorrectionSave();


Gain#

Gain refers to a multiplication factor applied to a signal to increase the strength of that signal. On Lucid cameras, gain can be either manually adjusted or automatically controlled.

Some cameras feature gain that is purely digital while others allow for analog gain control up to a certain value, beyond which the gain becomes digital. Depending on the camera family and sensor model, the specific gain control can vary.

Analog Gain

Analog Gain refers to amplification of the sensor signal prior to A/D conversion.

Digital Gain

Digital Gain refers to amplification of the signal after digitization.

| Model | Conversion Gain | Analog | Digital |

|---|---|---|---|

| ATP028S/ATL028S | HCG 7.2dB | 0-24dB | 24-48dB |

| ATL050S | NA | 0-24dB | 24-48dB |

| ATP071S/ATL071S | HCG 7.2dB | 0-24dB | 24-48dB |

| ATL089S | NA | 0-24dB | 24-48dB |

| ATL120S | NA | 0-24dB | 24-48dB |

| ATL168S | NA | 0-24dB | 24-48dB |

| ATL196S | NA | 0-24dB | 24-48dB |

| ATL314S | NA | 0-24dB | 24-48dB |

The following pseudocode demonstrates setting Gain to 12 dB:


// Connect to camera

// Get device node map

GainAuto = Off;

Gain = 12;

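To relate a gain value in dB to the multiplication factor described at the start of this section, the sketch below converts dB to a linear factor and splits a requested gain into analog and digital portions. It assumes the common amplitude convention (20·log10) and the 0-24 dB analog range shown in the table above; both helper names are hypothetical.

```python
from typing import Tuple


def db_to_linear(gain_db: float) -> float:
    """Convert a gain in dB to a linear multiplier (amplitude convention)."""
    return 10 ** (gain_db / 20)


def split_gain(gain_db: float, analog_max_db: float = 24.0) -> Tuple[float, float]:
    """Split a gain into (analog, digital) parts, filling analog gain first."""
    analog = min(gain_db, analog_max_db)
    digital = gain_db - analog
    return analog, digital


if __name__ == "__main__":
    print(f"12 dB is about x{db_to_linear(12):.2f}")
    print("30 dB splits into analog/digital:", split_gain(30.0))
```

Under these assumptions, the 12 dB setting in the pseudocode above corresponds to a multiplier of roughly 4 and stays entirely within the analog range.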

Color Processing#

The Atlas camera is equipped with a debayering core within the image processing pipeline. The color processing core enables the camera to output a color processed image format in addition to the unprocessed Bayer-tiled image. Currently the camera supports the RGB8 pixel format, which outputs 8 bits of data per color channel for a total of 24 bits per pixel. Because of the number of bits per pixel, an RGB8 image is 3 times the size of an 8-bit image with the same dimensions. This increase in image data per frame reduces the average frame rate of the camera.

The following pseudocode demonstrates configuring the camera to RGB8 pixel format:


// Connect to camera

// Get device node map

PixelFormat = PixelFormat_RGB8;

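The size difference between an 8-bit Bayer image and an RGB8 image can be worked out directly from the bits per pixel. The sketch below uses a hypothetical 1000 x 1000 image; the pixel format names follow the GenICam naming convention, and `frame_bytes` is a hypothetical helper.

```python
# Bits per pixel for the formats compared in this section.
BITS_PER_PIXEL = {"Mono8": 8, "BayerRG8": 8, "RGB8": 24}


def frame_bytes(width: int, height: int, pixel_format: str) -> int:
    """Size of one image in bytes for the given pixel format."""
    return width * height * BITS_PER_PIXEL[pixel_format] // 8


if __name__ == "__main__":
    for fmt in ("BayerRG8", "RGB8"):
        print(f"{fmt}: {frame_bytes(1000, 1000, fmt)} bytes per frame")
```

With a fixed link bandwidth, tripling the bytes per frame limits the achievable frame rate to about one third of the 8-bit rate.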

White Balance#

The white balance module aims to change the balance between the Red, Green and Blue channels such that a white object appears white in the acquired images. LUCID Vision Labs cameras allow for manual white balance adjustment by the user, or automatic white balance adjustment based on statistics of previously acquired frames. Different external illuminations and different sensors may render acquired images with color shift. The White Balance module allows the user to correct for the color shift by adjusting gain value of each color channel.

LUCID Vision Labs offers two types of white balance algorithm as described below. Both methods below allow for user controlled anchor points or reference points, from which multipliers are computed for each channel. The different anchor points are summarized below.

| Anchors | Information |

|---|---|

| Min | The lowest luminance channel is used as reference while other channels are adjusted to match it. There is no chance of overflowing the pixels, however the image is darkened. |

| Max | The highest luminance channel is used as reference while other channels are adjusted to match it. There is a chance of overflowing the pixels. |

| Mean | The mean value of all channels is used as reference while all channels are adjusted to match the mean. There is a smaller chance of overflowing. |

| Green | Green channel is used as the reference while the Red and Blue are adjusted. |

Grey World

A grey world assumes that the average of all colors in an image is a neutral grey.

White Patch

White Patch follows the same idea as Grey World, but considers only a section of the image (i.e. the white patches). A simple way to determine such sections is to treat a pixel as white when R+G+B is greater than a threshold pixel value. The threshold can be determined using the 90th percentile of the previous image. An additional threshold is also needed to exclude saturated pixels for better white balance adjustment.
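The anchor options in the table above can be illustrated with a small Grey World sketch that derives one gain per channel from the channel means. This is not the camera's implementation: the function name, the use of plain channel means, and the input format are all assumptions made for illustration.

```python
from typing import Dict


def grey_world_gains(means: Dict[str, float], anchor: str = "Green") -> Dict[str, float]:
    """Compute per-channel gains so every channel mean matches the anchor.

    `means` maps "R", "G" and "B" to the mean value of each channel.
    """
    if any(value <= 0 for value in means.values()):
        raise ValueError("channel means must be positive")
    references = {
        "Min": min(means.values()),
        "Max": max(means.values()),
        "Mean": sum(means.values()) / len(means),
        "Green": means["G"],
    }
    reference = references[anchor]
    return {channel: reference / mean for channel, mean in means.items()}


if __name__ == "__main__":
    channel_means = {"R": 100.0, "G": 200.0, "B": 50.0}
    for name in ("Min", "Max", "Mean", "Green"):
        print(name, grey_world_gains(channel_means, name))
```

With the Min anchor every gain is at most 1, so pixels cannot overflow but the image is darkened; with the Max anchor every gain is at least 1, which is where overflow becomes possible.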

Look-Up Table (LUT)#

The Look-Up Table (LUT) maps 12-bit raw sensor pixel values to 12-bit user-specified pixel values. Users enter values for the even indices and for the last index, 4095, while averaging is used to calculate the remaining odd indices. There are therefore 2049 effective input entries in total: 2048 even (e.g., 0, 2, 4, …, 4092, 4094) + 1 odd (4095). Index value 0 corresponds to black while index value 4095 corresponds to white.

To build a LUT, enter each index value to be replaced (e.g., 0, 2, 4, …, 4092, 4094, 4095) in the LUTIndex field and the corresponding new value in the LUTValue field. For the odd index values in between (e.g., 1, 3, 5, …, 4089, 4091, 4093), the mapped value is the average of the two neighboring mapped values. For example, if the input mappings are LUTIndex = 1090 -> LUTValue = 10 and LUTIndex = 1092 -> LUTValue = 20, then the mapped value of LUTIndex = 1091 is LUTValue = 15.

To reset the Look-Up Table, execute the LUTReset command.

The following pseudocode demonstrates replacing black pixel values with white pixel values:


// Connect to camera

// Get device node map

LUTEnable = true;

LUTIndex = 0; //Pixel value to be replaced, in this case black

LUTValue = 4095; //New pixel value, in this case white

LUTSave();

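The way the odd entries are derived from the user-supplied entries can be sketched on the host. The example below expands a set of user entries into a full 4096-entry table. It assumes that unspecified entries default to an identity mapping and that averages are rounded down; neither detail is stated in this manual, and `build_lut` is a hypothetical helper.

```python
from typing import Dict, List

LUT_SIZE = 4096


def build_lut(user_entries: Dict[int, int]) -> List[int]:
    """Expand user entries (even indices plus 4095) into a full LUT."""
    # Assumption: entries the user does not set keep an identity mapping.
    lut = list(range(LUT_SIZE))
    for index, value in user_entries.items():
        if index % 2 != 0 and index != LUT_SIZE - 1:
            raise ValueError(f"index {index} is not user-settable")
        lut[index] = value
    # Odd indices 1..4093 take the average of their two even neighbours.
    for index in range(1, LUT_SIZE - 1, 2):
        lut[index] = (lut[index - 1] + lut[index + 1]) // 2
    return lut


if __name__ == "__main__":
    lut = build_lut({1090: 10, 1092: 20})
    print(lut[1090], lut[1091], lut[1092])
```

The example reproduces the mapping described above, where index 1091 receives the average of the values entered for 1090 and 1092.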

Gamma#

The gamma control allows the optimization of brightness for display. LUCID implements gamma using the GenICam standard, that is:

$$X = Y^{\mathrm{Gamma}}$$

where,

- X = New pixel value; 0 <= X <=1

- Y = Old pixel value; 0 <= Y <=1

- Gamma = Pixel intensity: 0.2 <= Gamma <= 2

Y in the Gamma formula is scaled down to [0-1] from original pixel range which results in a pixel range of [0-1] for X. As an example, for 12-bit pixel formats, this would mean scaling down pixel range from [0-4095] to [0-1] and for 16-bit pixel formats, this would mean scaling down pixel range from [0-65535] to [0-1].

The camera applies gamma correction values to the intensity of each pixel. In general, gamma values can be summarized as follows:

- Gamma = 1: brightness is unchanged.

- 1 < Gamma <= 2: brightness decreases.

- 0.2 <= Gamma < 1: brightness increases.
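As a rough host-side illustration of these rules, the Python sketch below applies the relation X = Y^Gamma to a single 12-bit pixel value. The function name `apply_gamma` is hypothetical, and rounding the result back to an integer is an assumption of the sketch rather than documented camera behavior.

```python
def apply_gamma(pixel, gamma, max_value=4095):
    """Apply X = Y ** gamma to one pixel value of a given bit depth."""
    if not 0.2 <= gamma <= 2:
        raise ValueError("gamma must be within [0.2, 2]")
    if not 0 <= pixel <= max_value:
        raise ValueError("pixel out of range")
    y = pixel / max_value          # scale the old value down to [0, 1]
    x = y ** gamma                 # gamma correction
    return round(x * max_value)    # scale back up (rounding is an assumption)


mid_gray = 2048
print(apply_gamma(mid_gray, 1.0))   # unchanged
print(apply_gamma(mid_gray, 2.0))   # darker than the input
print(apply_gamma(mid_gray, 0.5))   # brighter than the input
```

For 16-bit pixel formats, pass `max_value=65535`.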

Color Space Conversion and Correction#

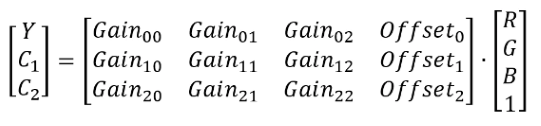

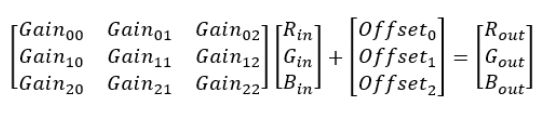

The Color Space Conversion control allows the user to convert from RGB color space to another color space such as YUV. The conversion is done in a linear manner as shown in the following equation.

The color correction function allows the user to choose between a few preset values or user-configurable matrix values. The color correction is done by allowing the multiplication of a 3×3 matrix to the 3×1 matrix containing R, G and B pixel values to achieve a more desirable R’, G’ and B’ values. The specific mathematical procedure can be represented by the following.

- ColorTransformationEnable is a node that indicates whether the conversion matrix of the color space conversion module is used or bypassed.

- When the pixel format is YUV or YCbCr, ColorTransformationSelector is displayed as RGBtoYUV. When the pixel format is Mono, ColorTransformationSelector is displayed as RGBtoY.

- ColorTransformationValueSelector chooses a coefficient in the conversion matrix; the value of that coefficient is shown in ColorTransformationValue. In Mono, YUV or YCbCr pixel formats, ColorTransformationValue is read only.
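The matrix multiplication behind color correction can be sketched in a few lines of Python. This is an illustration only: `color_correct` is a hypothetical helper, and the coefficients in `example` are made-up values, not one of the camera's presets.

```python
def color_correct(matrix, rgb):
    """Multiply a 3x3 correction matrix by an (R, G, B) column vector."""
    return tuple(
        sum(coefficient * channel for coefficient, channel in zip(row, rgb))
        for row in matrix
    )


identity = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
# Hypothetical coefficients: boost red and subtract part of green from it.
example = [
    [1.5, -0.5, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]

print(color_correct(identity, (100, 150, 200)))  # (100.0, 150.0, 200.0)
print(color_correct(example, (100, 150, 200)))   # (75.0, 150.0, 200.0)
```

The sketch does not clamp results to the valid pixel range; a real pipeline would need to.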

Image Format Controls#

The ATP200S camera is equipped with the following image format control capabilities.

Region of Interest (ROI)#

The region of interest feature allows you to specify which region of the sensor is used for image acquisition. This feature allows a custom width and height for image size and a custom X and Y offset for image position. The width and height must be a multiple of the minimum width and height values allowed by the camera.

The following pseudocode demonstrates configuring the camera to use a region of interest of 200×200 at offset (250,150):


// Connect to camera

// Get device node map

OffsetX = 250;

OffsetY = 150;

Width = 200;

Height = 200;


If the camera is acquiring images, AcquisitionStop must be called before changing region of interest settings.
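Before writing ROI values to the camera, it can help to check them on the host. The Python sketch below is one possible check, under stated assumptions: `validate_roi` is a hypothetical helper, and the sensor size (2448 x 2048) and minimum width and height (8 and 2) are placeholder values that should be read from the camera's own nodes.

```python
def validate_roi(offset_x, offset_y, width, height,
                 sensor_width, sensor_height, min_width, min_height):
    """Return a list of problems with the requested ROI (empty if valid)."""
    problems = []
    if width <= 0 or width % min_width:
        problems.append("Width must be a positive multiple of %d" % min_width)
    if height <= 0 or height % min_height:
        problems.append("Height must be a positive multiple of %d" % min_height)
    if offset_x < 0 or offset_x + width > sensor_width:
        problems.append("ROI exceeds the sensor horizontally")
    if offset_y < 0 or offset_y + height > sensor_height:
        problems.append("ROI exceeds the sensor vertically")
    return problems


# 200x200 ROI at offset (250, 150) on a placeholder 2448 x 2048 sensor.
print(validate_roi(250, 150, 200, 200, 2448, 2048, 8, 2))  # []
```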

Binning#

The ATP200S camera supports binning, in which columns and/or rows of pixels are combined to reduce the overall image size without changing the image’s field of view. This feature may increase the camera’s frame rate.

The binning factor indicates how many pixels in the horizontal and vertical axis are combined. For example, when applying 2×2 binning, which is 2 pixels in the horizontal axis and 2 pixels in the vertical axis, 4 pixels combine to form 1 pixel. The resultant pixel values can be summed or averaged.

When binning is used, the settings of the image width and height will be affected. For example, if you are using a camera with sensor resolution of 2448 x 2048 and apply 2×2 binning, the effective resolution of the resultant image is reduced to 1224 x 1024. This can be verified by checking the Width and Height nodes.
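The effect of binning on pixel values and image size can be illustrated on a small image. The Python sketch below is a host-side simulation only: `bin_image` is a hypothetical helper, the averaged result uses floor division as an assumption, and clipping of summed values at the maximum pixel value is not modeled.

```python
def bin_image(image, factor_x, factor_y, mode="Sum"):
    """Combine factor_x * factor_y blocks of pixels into single pixels."""
    height, width = len(image), len(image[0])
    binned = []
    for top in range(0, height - height % factor_y, factor_y):
        row = []
        for left in range(0, width - width % factor_x, factor_x):
            block = [image[y][x]
                     for y in range(top, top + factor_y)
                     for x in range(left, left + factor_x)]
            total = sum(block)
            # "Sum" adds the pixels; any other mode averages them.
            row.append(total if mode == "Sum" else total // len(block))
        binned.append(row)
    return binned


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(bin_image(image, 2, 2, "Sum"))      # [[14, 22], [46, 54]]
print(bin_image(image, 2, 2, "Average"))  # [[3, 5], [11, 13]]
```

With 2×2 binning the 4 x 4 input becomes 2 x 2, in the same way that 2448 x 2048 becomes 1224 x 1024.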

The following pseudocode demonstrates configuring binning on the camera:


// Connect to camera

// Get device node map

BinningSelector = Digital; // Digital binning is performed by FPGA, some cameras will support BinningSelector = Sensor

BinningHorizontalMode = Sum; // Binned horizontal pixels will be summed (additive binning)

BinningVerticalMode = Sum; // Binned vertical pixels will be summed (additive binning)

BinningHorizontal = 2; // Set Horizontal Binning by a factor of 2

BinningVertical = 2; // Set Vertical Binning by a factor of 2

// Resulting image is 1/4 of original size


When horizontal binning is used, horizontal decimation (if supported) is not available. When vertical binning is used, vertical decimation (if supported) is not available.

If the camera is acquiring images, AcquisitionStop must be called before adjusting binning settings.

ADC bit mode#

Three ADC bit modes are available across the Atlas family: 8 Bit, 10 Bit and 12 Bit (the ATP200S supports 12 Bit only). Because the sensor is clocked differently in each bit mode, the maximum achievable frame rate differs between modes.

The following table shows the maximum frame rate achieved using the Mono8 pixel format.

| Atlas model | 8 Bit (FPS) | 10 Bit (FPS) | 12 Bit (FPS) |

|---|---|---|---|

| ATP028S/ATL028S | 173 | 143 | 121 |

| ATL050S | 98 | 80 | 68 |

| ATP071S/ATL071S | 74.6 | 60.5 | 50.9 |

| ATP089S/ATL089S | 58.5 | 47.4 | 40 |

| ATP120S/ATL120S | 42.5 | 34.5 | 29.1 |

| ATL168S | 32.9 | 26.6 | 22.3 |

| ATL196S | 27.9 | 22.7 | 19.1 |

| ATP200S | N/A | N/A | 17.9 |

| ATL314S | 17.9 | 14.4 | 12.1 |

Decimation#

The camera supports decimation, in which columns and/or rows of pixels are skipped to reduce the overall image size without changing the image’s field of view. This feature is also known as “subsampling” because the camera transmits a smaller sample of pixels. This feature may increase the camera’s frame rate.

When decimation is used, the settings of the image width and height will be affected. For example, if you are using a camera with a sensor resolution of 2448 x 2048 and set both horizontal and vertical decimation to 2, the effective resolution of the resultant image is reduced to 1224 x 1024. This can be verified by checking the Width and Height nodes.
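Decimation can be illustrated on the host by skipping rows and columns of a small image. In the Python sketch below, `decimate` is a hypothetical helper, and keeping the first pixel of each group is an assumption, since which pixels the sensor keeps is not specified here.

```python
def decimate(image, factor_x, factor_y):
    """Keep every factor_y-th row and every factor_x-th column."""
    return [row[::factor_x] for row in image[::factor_y]]


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(decimate(image, 2, 2))  # [[1, 3], [9, 11]]
```

Unlike binning, the skipped pixels do not contribute to the output values.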

The following pseudocode demonstrates configuring decimation on the camera:


// Connect to camera

// Get device node map

DecimationHorizontal = 2; //Set Horizontal Decimation by factor of 2

DecimationVertical = 2; // Set Vertical Decimation by factor of 2

// Resulting image is 1/4 of original size


When horizontal decimation is used, horizontal binning (if supported) is not available. When vertical decimation is used, vertical binning (if supported) is not available.

If the camera is acquiring images, AcquisitionStop must be called before adjusting decimation settings.

Horizontal and Vertical Flip#

This feature allows the camera to flip the image horizontally and vertically. The flip action occurs on the camera before transmitting the image to the host.
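The result of each flip setting can be previewed on the host with a small simulation. In the Python sketch below, `flip` is a hypothetical helper whose arguments mirror the ReverseX and ReverseY nodes; it does not talk to the camera.

```python
def flip(image, reverse_x=False, reverse_y=False):
    """Mirror an image left-to-right (reverse_x) and/or top-to-bottom (reverse_y)."""
    rows = image[::-1] if reverse_y else image
    return [row[::-1] if reverse_x else list(row) for row in rows]


image = [
    [1, 2],
    [3, 4],
]
print(flip(image, reverse_x=True))                  # [[2, 1], [4, 3]]
print(flip(image, reverse_x=True, reverse_y=True))  # [[4, 3], [2, 1]]
```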

The following pseudocode demonstrates configuring the camera to flip both horizontal and vertical axes:


// Connect to camera

// Get device node map

ReverseX = True;

ReverseY = True;


If the camera is acquiring images, AcquisitionStop must be called before changing horizontal or vertical flip.

Test Pattern#

The camera outputs an FPGA-generated pattern when test pattern is enabled.

Digital IO#

The ATP200S’s Digital IO controls input and output lines that can be utilized with external circuitry for synchronization with other devices. An example use of an input line is to allow the camera to take an image upon receipt of an internal software signal or an external pulse (rising or falling edge). An example use of an output line is to fire a pulse when the camera starts integration for the duration of the current ExposureTime value.

The Digital IO lines correspond to the Atlas’ GPIO pins. Please consult the GPIO Cable section for more information on the required cable for the GPIO connector and the GPIO Characteristics section for a GPIO pinout diagram.

Configuring an Input Line#

When a Digital IO line is set to Input, the line can accept external pulses. To trigger the camera upon receipt of an external pulse, the camera must also have trigger mode enabled.

The following pseudocode demonstrates enabling trigger mode and setting Line0 as the trigger input source.


// Connect to camera

// Get device node map

// Choose Line0 and set it to Input

LineSelector = Line0;

LineMode = Input;

TriggerMode = On;

TriggerSelector = FrameStart; // Trigger signal starts a frame capture

TriggerSource = Line0; // External signal to be expected on Line0

TriggerActivation = FallingEdge; // Camera will trigger on the falling edge of the input signal

AcquisitionStart();

// Acquire images by sending pulses to Line0


It is also possible to set software as the input source, so that the camera triggers upon a software signal. Note that this mechanism may not be as accurate as using an external trigger source.

The following pseudocode demonstrates enabling trigger mode and setting a software trigger source.


// Connect to camera

// Get device node map

TriggerMode = On;

TriggerSelector = FrameStart; // Trigger signal starts a frame capture

TriggerSource = Software; // Software signal will trigger camera

AcquisitionStart();

TriggerSoftware(); // Execute TriggerSoftware to signal the camera to acquire an image


TriggerOverlap#

By default, the ATP200S rejects input pulses until the last triggered image has completed the readout step on the sensor. This may limit the maximum achievable trigger frequency on some cameras compared to the maximum non-triggered FrameRate.

To address this situation, the ATP200S also supports TriggerOverlap functionality. When TriggerOverlap is enabled, the camera can accept an input pulse before the readout step is complete. This allows the camera to be triggered at frequencies closer to the maximum non-triggered FrameRate.
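The difference can be illustrated with a deliberately simplified timing model. The Python sketch below is not a description of the sensor's actual timing: `accepted_triggers` is a hypothetical helper, and it assumes that without overlap a new pulse is accepted only after the previous exposure and readout have both finished, while with overlap a new exposure may run during the previous readout.

```python
def accepted_triggers(trigger_times_us, exposure_us, readout_us, overlap):
    """Return the trigger times accepted by the simplified camera model."""
    accepted = []
    ready_at = 0
    for t in sorted(trigger_times_us):
        if t < ready_at:
            continue  # pulse rejected: the camera is not ready yet
        accepted.append(t)
        if overlap:
            # The next exposure may start during this frame's readout, as long
            # as it does not end before that readout has finished.
            ready_at = t + max(exposure_us, readout_us)
        else:
            # The next exposure must wait for exposure and readout to finish.
            ready_at = t + exposure_us + readout_us
    return accepted


pulses = [0, 10_000, 20_000, 30_000, 40_000]  # one pulse every 10 ms
print(accepted_triggers(pulses, 5_000, 10_000, overlap=False))  # [0, 20000, 40000]
print(accepted_triggers(pulses, 5_000, 10_000, overlap=True))   # all five pulses
```

In this model, a 5 ms exposure with a 10 ms readout halves the usable trigger rate unless overlap is enabled.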

Some Digital IO lines may be Input only (e.g., an opto-isolated input). Consult the GPIO Characteristics section for a GPIO pinout diagram.

Configuring an Output Line#

When a Digital IO line is set to Output, the line can fire pulses.

The following pseudocode demonstrates setting Line1 as the output source. This sets up the camera to fire a pulse when the camera is acquiring an image.


// Connect to camera

// Get device node map

// Choose Line1 and set it to Output

LineSelector = Line1;

LineMode = Output;

LineSource = ExposureActive; // The output pulse will match the duration of ExposureTime

AcquisitionStart();

// Acquire images and process signal from Line1


Opto-isolated outputs require external circuitry to be properly signaled. Some Digital IO lines may be Output only (e.g., an opto-isolated output). Consult the GPIO Characteristics section for a GPIO pinout diagram.

Turning on GPIO Voltage Output#

The Atlas is capable of supplying external circuits with power through the VDD line. By default this line is turned off. The following pseudocode demonstrates enabling the GPIO VDD line to output 2.5V.


// Connect to camera

// Get device node map

// Choose Line4 and set it to output external voltage

LineSelector = Line4;

VoltageExternalEnable = True;

// Continue with the rest of the program...


Consult the GPIO Characteristics section for a GPIO pinout diagram.

Chunk Data#

Chunk data is additional tagged data that can be used to identify individual images. The chunk data is appended after the image data.

Extracting the Image CRC checksum with ChunkCRC#

When the chunk data property named CRC is enabled, the camera appends a cyclic redundancy check (CRC) checksum that is calculated over the image payload.

The following pseudocode demonstrates enabling the CRC property in chunk data and extracting the ChunkCRC chunk from acquired images:


// Connect to camera

// Get device node map

ChunkModeActive = True;

ChunkSelector = CRC;

ChunkEnable = True;

AcquisitionStart();

// Acquire image into buffer

// Read chunk data from received buffer

bufferCRC = ChunkCRC; // CRC checksum for image payload


If the camera is acquiring images, AcquisitionStop must be called before enabling or disabling chunk data.

Transfer Control#

Transfer Control allows the device to accumulate images in a queue in the on-camera buffer. The data stored in the queue, referred to as blocks, can be transmitted to the host application at a later time. The host application can request that the device transmit one or more blocks. By default, this control is disabled on the ATP200S and acquired images are automatically transmitted.

Automatic Transfer Control#

When using Automatic Transfer Control mode, the transfer of blocks to the host is controlled by the device’s acquisition controls.

In Automatic Transfer Control mode, the TransferOperationMode is read only and set to Continuous. Using Continuous TransferOperationMode is similar to when Transfer Control is not enabled, except the host application can stop data transmission without stopping image acquisition on the device.

The following pseudocode demonstrates enabling Automatic Transfer Control mode on the camera.


// Connect to camera

// Get device node map

// Set desired Pixel Format, Width, Height

// Optional: Read TransferQueueMaxBlockCount to determine the number of blocks

// that can be stored in the on-camera buffer with the current device settings

TransferControlMode = Automatic;

TransferQueueMode = FirstInFirstOut;

AcquisitionStart(); // When in Automatic Transfer Control Mode, the

// AcquisitionStart command automatically executes the TransferStart command

// Acquire images

// Images will be accumulating into the on-camera buffer

// Blocks will be transmitted to the host system automatically until TransferPause or AcquisitionStop is executed

// Retrieve images on host system


In Automatic Transfer Control mode, the following commands are available:

- TransferPause to pause transfer of blocks without executing TransferStop or AcquisitionStop.

- TransferResume to resume transfer of blocks after TransferPause has been called.

- TransferAbort to abort transfer of blocks.

- TransferStop to stop transfer of blocks.

When blocks are not transmitted from the on-camera buffer to the host quickly enough and the number of blocks to be stored in the buffer exceeds TransferQueueMaxBlockCount, the new images will be dropped. Set TransferStatusSelector to QueueOverflow and read TransferStatus to determine if a block is lost.

UserControlled Transfer Control#

When using UserControlled Transfer Control mode, the transfer of blocks to the host is controlled by the host application. Using MultiBlock TransferOperationMode with UserControlled Transfer Control allows the host application to specify when to transmit data from the device.

The following pseudocode demonstrates enabling UserControlled Transfer Control mode with MultiBlock TransferOperationMode on the camera. It also demonstrates transferring 2 blocks from the on-camera buffer for each transmission operation.


// Connect to camera

// Get device node map

// Set desired PixelFormat, Width, Height

// Optional: Read TransferQueueMaxBlockCount to determine the number of blocks

// that can be stored in the on-camera buffer with the current device settings

TransferControlMode = UserControlled;

TransferQueueMode = FirstInFirstOut;

TransferOperationMode = MultiBlock; // Transmit the number of blocks in TransferBlockCount

TransferBlockCount = 2; // This should be less than or equal to TransferQueueMaxBlockCount

AcquisitionStart();

// Acquire images

// Images will be accumulating into the on-camera buffer

// Read TransferQueueCurrentBlockCount

if (TransferQueueCurrentBlockCount > 0)

{

// Transmit blocks to host system

TransferStart();

// Retrieve block 1 on host system

// Retrieve block 2 on host system

TransferStop();

}


In UserControlled Transfer Control mode, the following commands are available:

- TransferPause to pause transfer of blocks without executing TransferStop or AcquisitionStop.

- TransferResume to resume transfer of blocks after TransferPause has been called.

- TransferAbort to abort transfer of blocks.

- TransferStop to stop transfer of blocks.

When blocks are not transmitted from the on-camera buffer to the host quickly enough and the number of blocks to be stored in the buffer exceeds TransferQueueMaxBlockCount, the new images will be dropped. Set TransferStatusSelector to QueueOverflow and read TransferStatus to determine if a block is lost.
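The queue and overflow behavior described above can be modeled on the host. The Python sketch below is a simplified simulation, not camera firmware: the `TransferQueue` class and its method names are hypothetical, with attributes named loosely after TransferQueueMaxBlockCount, TransferBlockCount and the QueueOverflow status.

```python
from collections import deque


class TransferQueue:
    """Simplified FIFO model of the on-camera block queue."""

    def __init__(self, max_block_count, transfer_block_count):
        self.max_block_count = max_block_count
        self.transfer_block_count = transfer_block_count
        self.blocks = deque()
        self.queue_overflow = False

    def acquire(self, block):
        """Store a new block, or drop it if the queue is already full."""
        if len(self.blocks) >= self.max_block_count:
            self.queue_overflow = True  # the new image is dropped
            return False
        self.blocks.append(block)
        return True

    def transfer_start(self):
        """Transmit up to transfer_block_count blocks, oldest first."""
        count = min(self.transfer_block_count, len(self.blocks))
        return [self.blocks.popleft() for _ in range(count)]


queue = TransferQueue(max_block_count=3, transfer_block_count=2)
for frame_id in range(5):
    queue.acquire(frame_id)
print(queue.queue_overflow)    # True: frames 3 and 4 were dropped
print(queue.transfer_start())  # [0, 1]
print(list(queue.blocks))      # [2]
```

Whether the overflow status stays latched after blocks are transferred is not modeled here.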

To turn off the Transfer Control mechanism, set the TransferControlMode to Basic.

If the camera is acquiring images, AcquisitionStop must be executed before changing Transfer Control properties.

Event Control#

Events are notifications generated by the camera to inform the host application about internal updates. Event Control is a mechanism that uses these events to synchronize the camera with the host application.

EventSelector selects the event whose notifications are turned on or off. By default, EventNotification is set to Off for all events except the Test event, whose EventNotification is always On and cannot be changed.
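The notification rules can be sketched as a small host-side model. The Python class below is purely illustrative: `EventControl` and its methods are hypothetical names, not part of any SDK, and the model only captures the On/Off rule and callback delivery described above.

```python
class EventControl:
    """Toy model of per-event notification switches and callbacks."""

    def __init__(self, event_names):
        # Every event starts Off except Test, which is always On.
        self.notification = {name: False for name in event_names}
        self.notification["Test"] = True
        self.callbacks = []

    def set_notification(self, event, enabled):
        if event == "Test":
            raise ValueError("EventNotification for Test cannot be changed")
        if event not in self.notification:
            raise KeyError(event)
        self.notification[event] = enabled

    def register_callback(self, callback):
        self.callbacks.append(callback)

    def generate(self, event, timestamp):
        """Deliver the event to all callbacks if its notification is On."""
        if not self.notification[event]:
            return False
        for callback in self.callbacks:
            callback(event, timestamp)
        return True


received = []
control = EventControl(["ExposureStart", "ExposureEnd"])
control.register_callback(lambda event, ts: received.append((event, ts)))
control.generate("ExposureStart", 100)          # ignored: notification is Off
control.set_notification("ExposureStart", True)
control.generate("ExposureStart", 200)
control.generate("Test", 300)
print(received)  # [('ExposureStart', 200), ('Test', 300)]
```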

The following pseudocode demonstrates enabling Event Control on the camera.


// Connect to camera

// Get device node map

InitializeEvents();

RegisterCallback();

GenerateEvent();

// Wait on event

DeregisterCallback();

DeinitializeEvents();


The following events are available on the ATP200S:

Exposure Start#

The Exposure Start event occurs when the camera starts exposing the sensor to capture a frame. The camera sends the following data with the Exposure Start event:

- EventExposureStart: Unique identifier of the Exposure Start type of Event.

- EventExposureStartTimestamp: Unique timestamp of the Exposure Start Event.

- EventExposureStartFrameID: Unique Frame ID related to Exposure Start Event.

Exposure End#

The Exposure End event occurs when the camera has finished exposing the sensor. The camera sends the following data with the Exposure End event:

- EventExposureEnd: Unique identifier of the Exposure End type of Event.

- EventExposureEndTimestamp: Unique timestamp of the Exposure End Event.

- EventExposureEndFrameID: Unique Frame ID related to Exposure End Event.

Test#

The Test event occurs when the TestEventGenerate node is executed. The Test event is mainly used to confirm that the camera is generating events. The camera sends the following data with the Test event:

- EventTest: Unique identifier of the Test type of Event.

- EventTestTimestamp: Unique timestamp of the Test Event.

Counter and Timer Control#

Counter#

Counters can be used to keep a running total of an internal event. The Counter value can be reset, read, or written at any time.

The following nodes are available to configure the Counter increment:

- CounterSelector: The Counter control to be used

- CounterEventSource: The event source that will increment the Counter

- CounterEventActivation: The activation mode used for CounterEventSource (e.g., RisingEdge)

- CounterTriggerSource: The source that will start the Counter

The following nodes are available to configure the Counter reset:

- CounterResetSource: The signal that will reset the Counter

- CounterResetActivation: The signal used to activate CounterResetSource

- CounterReset: The command that resets CounterValue and stores the last value in CounterValueAtReset

The following nodes are available to read or control the Counter:

- CounterValue: The current value of the Counter

- CounterValueAtReset: The value of the Counter before it was reset

- CounterDuration: The value to count before setting CounterStatus = CounterCompleted

- CounterStatus: The status of the Counter

The following CounterStatus modes are available:

- CounterIdle: Counter control is not enabled

- CounterTriggerWait: Counter is waiting to be activated

- CounterActive: Counter is counting until the value specified in CounterDuration

- CounterCompleted: Counter has incremented to value set in CounterDuration

- CounterOverflow: CounterValue has reached its maximum possible value

When CounterValue = CounterDuration, the counter stops counting until a CounterReset or a new CounterTriggerSource occurs.

Changing CounterDuration will issue a CounterReset to CounterValue.

Use Counter to Count Rising Edges#

The following pseudocode demonstrates using a Rising Edge counter event to track the number of rising edge signals received on Line0. A second counter is enabled to track the number of Exposure Start events that occurred, which indicates the number of images captured.

// Connect to camera

// Get device node map

// Use Counter0 to count rising edges received on Line0

CounterSelector = Counter0;

CounterEventSource = Line0;

CounterEventActivation = RisingEdge;

CounterTriggerSource = AcquisitionStart; // Start this counter at the camera's AcquisitionStart

CounterDuration = 1;

CounterValue = 1; // Start at CounterValue = CounterDuration (i.e., increment the counter until we reset it)

// Use Counter1 to count ExposureStart events occurring on camera

CounterSelector = Counter1;

CounterEventSource = ExposureStart;

CounterEventActivation = RisingEdge;

CounterTriggerSource = AcquisitionStart; // Start this counter at the camera's AcquisitionStart

CounterDuration = 1;

CounterValue = 1; // Start at CounterValue = CounterDuration (i.e., increment the counter until we reset it)

// Enable Rising Edge trigger on Line0

TriggerSelector = FrameStart;

TriggerSource = Line0;

TriggerActivation = RisingEdge;

TriggerMode = On;

AcquisitionStart();

// Acquire images by sending pulses to Line0

AcquisitionStop();

// To read rising edge pulses received:

// CounterSelector = Counter0

// Read CounterValue

// To read images captured:

// CounterSelector = Counter1

// Read CounterValue

// CounterValue for Counter0 should equal CounterValue for Counter1. If not we missed a trigger signal.


Timer#

Timers can be used to measure the duration of internal or external signals.

The following nodes are available to configure the Timer:

- TimerSelector: The Timer control to be used

- TimerTriggerSource: The source that will trigger the Timer

- TimerTriggerActivation: The activation mode used for TimerTriggerSource (e.g., RisingEdge)

- TimerDuration: The duration of the Timer pulse in microseconds

- TimerDelay: The duration of delay to apply before starting the Timer upon receipt of a TimerTriggerActivation

- TimerReset: Resets the Timer control

- TimerValue: Reads or writes the current value of the Timer

- TimerStatus: The status of the Timer

The following TimerStatus modes are available:

- TimerIdle: Timer control is not enabled

- TimerTriggerWait: Timer is waiting to be activated

- TimerActive: Timer is counting for the specified duration in TimerDuration

- TimerCompleted: Timer has reached the duration specified in TimerDuration

When TimerStatus = TimerCompleted, the Timer signal becomes active low until a TimerReset or a new TimerTriggerSource occurs.
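The relationship between TimerDelay, TimerDuration and the resulting pulse can be worked out on the host. The Python sketch below is an illustration only: both helper names are hypothetical, and the pulse-width modulation period is an assumed value chosen by whoever retriggers the Timer, not a camera node.

```python
def timer_pulse(trigger_time_us, timer_delay_us, timer_duration_us):
    """Return (start, end) of the Timer-active window in microseconds."""
    start = trigger_time_us + timer_delay_us
    return start, start + timer_duration_us


def duty_cycle(timer_duration_us, period_us):
    """Fraction of each period the Timer is active when retriggered periodically."""
    if period_us <= 0 or timer_duration_us > period_us:
        raise ValueError("period must be positive and at least the duration")
    return timer_duration_us / period_us


# A trigger at t = 0 with a 2000 us delay and an 8000 us duration.
print(timer_pulse(0, 2000, 8000))  # (2000, 10000)
# A 2000 us pulse retriggered every 10000 us (assumed period).
print(duty_cycle(2000, 10000))     # 0.2
```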

Use Timer to Delay a Line Output Pulse#

The following pseudocode demonstrates using a Timer to delay a Line output pulse relative to the ExposureStart event of the image.

// Connect to camera

// Get device node map

// Set up Line2 for Timer pulse output:

LineSelector = Line2;

LineMode = Output;

LineSource = Timer0Active; // The Line output will be the duration of the Timer

// Use Timer0 to configure your Line2 pulse:

TimerSelector = Timer0;

TimerTriggerSource = ExposureStart;

TimerTriggerActivation = RisingEdge;

TimerDelay = 2000; // The Line output delay will be 2000 microseconds from the ExposureStart event

TimerDuration = 8000; // The Line output duration will be 8000 microseconds

AcquisitionStart();

// Acquire images

// Monitor Line2 output


Use Timer to Output a Custom Pulse Width#

The following pseudocode demonstrates using a Timer to output a pulse of a custom width. This can be used for a single pulse width or pulse width modulation.

// Connect to camera

// Get device node map

// Set up Line2 for Timer pulse output:

LineSelector = Line2;

LineMode = Output;

LineSource = Timer0Active; // The Line output will be the duration of the Timer

// Use Timer0 to configure your Line2 pulse:

TimerSelector = Timer0;

TimerTriggerSource = SoftwareSignal0;

TimerTriggerActivation = RisingEdge;

TimerDelay = 0;

TimerDuration = 2000; // The Line output duration will be 2000 microseconds

// Use SoftwareSignal0 to trigger the Timer pulse

SoftwareSignalSelector = SoftwareSignal0;

SoftwareSignalPulse();


Firmware Update#

Use the camera’s Firmware Update page to update your camera’s firmware. Updating your camera’s firmware is easy with our firmware fwa file. The firmware fwa files for each camera are located on our Downloads page.

Every Lucid camera comes with an on-board firmware update page that can be directly accessed in a web browser. If you already know your camera’s IP address you can access it at http://{your-camera-ip-address}/firmware-update.html.
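When managing several cameras, the firmware update address can be built from each camera's IP address. The Python sketch below is one possible helper: the function name `firmware_update_url` is hypothetical, the IP address shown is a placeholder, and restricting the input to IPv4 is an assumption of the sketch.

```python
import ipaddress


def firmware_update_url(camera_ip):
    """Build the firmware update page URL for a camera's IPv4 address."""
    address = ipaddress.ip_address(camera_ip)  # raises ValueError if malformed
    if address.version != 4:
        raise ValueError("expected an IPv4 address")
    return "http://%s/firmware-update.html" % address


# Placeholder address; substitute your camera's actual IP address.
print(firmware_update_url("192.168.1.10"))
# http://192.168.1.10/firmware-update.html
```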

Updating your Camera Firmware#

- Navigate to your camera’s firmware update page in your web browser (http://{your-camera-ip-address}/firmware-update.html).

- Click Choose a file on the Firmware Update page and select your fwa file.

- Click Submit on the Firmware Update page. This will start the firmware update process.

The Firmware Update page will show the progress of the update. During the update, the camera will not be accessible for control or image capture. The update may take a few minutes to complete. Please refrain from removing power from the camera during the update.

When the firmware update is complete, the camera will reboot. Once the update is finished, you can close the Firmware Update page and access the camera again.

Device Nodes#

Acquisition Control#

Acquisition Control contains features related to image acquisition. Triggering and exposure control functionalities are included in this section.

Acquisition Modes#

There are three main acquisition modes: SingleFrame acquisition, MultiFrame acquisition, and Continuous acquisition.

SingleFrame Acquisition#

Under SingleFrame acquisition mode, one frame is acquired after AcquisitionStart is called. AcquisitionStop is an optional call as the acquisition process automatically stops after the single frame is acquired. During the acquisition process, all Transport Layer parameters are locked and cannot be modified.

Note that if AcquisitionStop is executed after AcquisitionStart but before a frame is available, it is possible that no frame is acquired.

The following pseudocode demonstrates configuring the camera for SingleFrame acquisition mode.


// Connect to camera

// Get device node map

AcquisitionMode = SingleFrame;


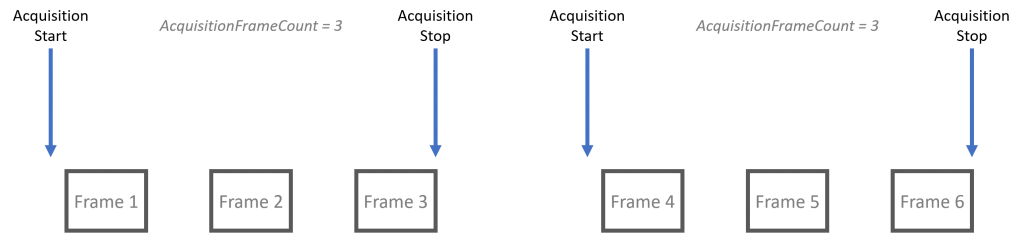

MultiFrame Acquisition#

Under MultiFrame acquisition mode, frames are acquired once AcquisitionStart is called. The number of frames acquired is dictated by the parameter AcquisitionFrameCount. During the acquisition process, all Transport Layer parameters are locked and cannot be modified.

Note that AcquisitionStop is optional under this acquisition mode.

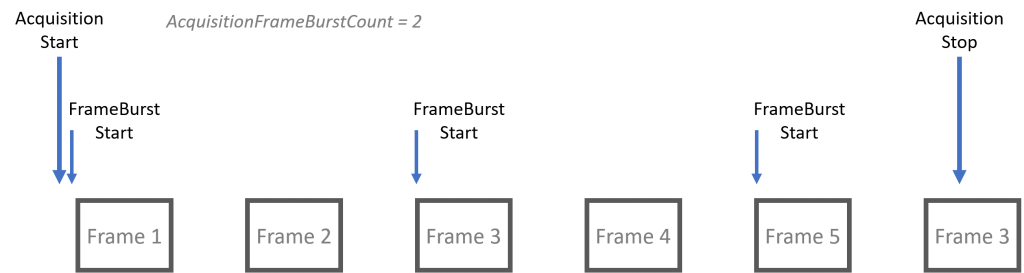

MultiFrame with FrameBurstStart#

A burst of frames is defined as a capture of a group of one or many frames within an acquisition. This can be achieved with MultiFrame acquisition mode as demonstrated in the diagram below. Note in the diagram, the second FrameBurstStart in each acquisition sequence only results in 1 frame acquired since AcquisitionFrameCount is set to a value of 3.

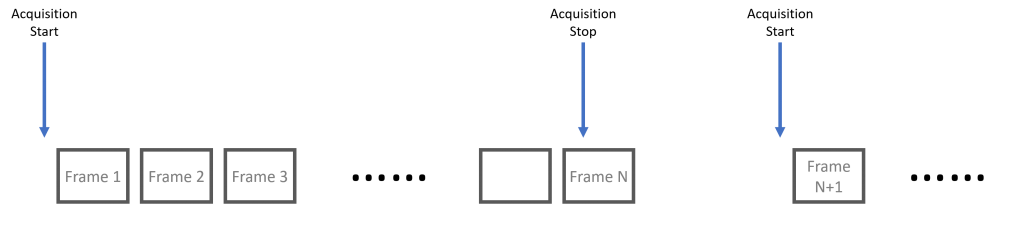
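The way AcquisitionFrameCount caps the frames in each burst can be sketched as simple arithmetic. In the Python example below, `frames_per_burst` is a hypothetical helper, and the burst frame count of 2 is an assumption inferred from the description above (a second burst yielding 1 frame when AcquisitionFrameCount is 3).

```python
def frames_per_burst(acquisition_frame_count, burst_frame_count, burst_triggers):
    """Return how many frames each FrameBurstStart trigger yields."""
    remaining = acquisition_frame_count
    frames = []
    for _ in range(burst_triggers):
        if remaining == 0:
            break  # the acquisition sequence is already complete
        count = min(burst_frame_count, remaining)
        frames.append(count)
        remaining -= count
    return frames


# AcquisitionFrameCount = 3, assumed burst frame count = 2, two triggers.
print(frames_per_burst(3, 2, 2))  # [2, 1]
```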

Continuous Acquisition#

Under Continuous acquisition mode, frames are acquired once AcquisitionStart is called. Frames are acquired until AcquisitionStop is called. During the acquisition process, all Transport Layer parameters are locked and cannot be modified.

Note that if AcquisitionStop is called during the last frame then the acquisition sequence will stop after the current frame finishes.


// Connect to camera

// Get device node map

AcquisitionMode = Continuous;


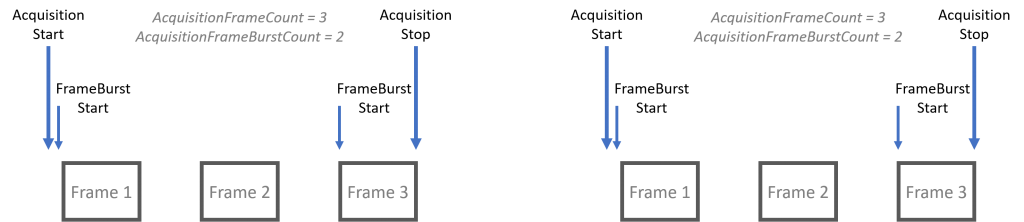

Continuous Acquisition with FrameBurstStart#

A burst of frames is defined as a capture of a group of one or many frames within an acquisition. This can be achieved with Continuous acquisition mode as below. Note that when AcquisitionStop is called, the current frame will need to be finished.

| Node Name | Description |

|---|---|

| AcquisitionMode | Specifies the acquisition mode of the current device. It helps determine the number of frames to acquire during each acquisition sequence. |

| AcquisitionStart | Start the acquisition sequence for the current device. |

| AcquisitionStop | Stop the acquisition sequence for the current device. |

| AcquisitionFrameCount | This node specifies the number of frames to be acquired under MultiFrame AcquisitionMode. |

| AcquisitionBurstFrameCount | This parameter is ignored if AcquisitionMode is set to SingleFrame. This feature is also constrained by AcquisitionFrameCount if AcquisitionMode is MultiFrame. |

| AcquisitionFrameRate | Controls the acquisition rate (in Hertz) at which the frames are captured. |

| TransmissionFrameRate | Specifies the rate (in Hertz) at which frames are transmitted. |

| AcquisitionFrameRateEnable | Controls if the AcquisitionFrameRate feature is writable and used to control the acquisition rate. |

| AcquisitionLineRate | Controls the rate (in Hertz) at which the Lines in a Frame are captured. |

| TriggerSelector | This node selects the specific trigger type to configure. |

| TriggerMode | Controls the On/Off status of the current trigger. |

| TriggerSoftware | Executing this will generate a software trigger signal. Note that current TriggerSource must be set to Software. |

| TriggerSource | This node specifies the source of the trigger. It can be an internal software signal or a physical input hardware signal. |

| TriggerActivation | This node specifies the state in which trigger is activated. |

| TriggerOverlap | Specifies the type of trigger overlap permitted with the previous frame or line. This defines when a valid trigger will be accepted (or latched) for a new frame or a new line. |

| TriggerLatency | Enables low latency trigger mode. |

| TriggerDelay | Specifies the delay in microseconds (us) to apply after the trigger reception before activating it. |

| TriggerArmed | Specifies whether the trigger is armed. If the trigger is not armed, triggers will be ignored. |

| ExposureTime | Controls the device exposure time in microseconds (us). |

| ExposureTimeRaw | Reports the device raw exposure time value. |

| ShortExposureEnable | Enables Short Exposure Mode. |

| ExposureAuto | Sets the automatic exposure mode. |

| ExposureAutoLimitAuto | Specifies usage of ExposureAutoLowerLimit and ExposureAutoUpperLimit. |

| ExposureAutoLowerLimit | Specifies the lower limit of ExposureAuto algorithm. |

| ExposureAutoUpperLimit | Specifies the upper limit of ExposureAuto algorithm. |

| TargetBrightness | Sets the target brightness in 8-bit. |

| ExposureAutoAlgorithm | Controls the auto exposure algorithm. |

| ExposureAutoDamping | Controls the auto exposure damping factor in percent. Bigger values converge faster but have higher chance of oscillating. |

| ExposureAutoDampingRaw | Controls the auto exposure damping factor raw value. Bigger values converge faster but have higher chance of oscillating. |

| CalculatedMedian | Reports the current image exposure median value. |

| CalculatedMean | Reports the current image exposure average value. |

| AutoExposureAOI | Category for auto exposure AOI features. |

Auto Exposure AOI#

| Node Name | Description |

|---|---|

| AutoExposureAOIEnable | Controls auto exposure AOI enable (1) or disable (0). |

| AutoExposureAOIWidth | Controls auto exposure AOI width relative to user AOI. |

| AutoExposureAOIHeight | Controls auto exposure AOI height relative to user AOI. |

| AutoExposureAOIOffsetX | Controls auto exposure AOI offset X relative to user AOI. |

| AutoExposureAOIOffsetY | Controls auto exposure AOI offset Y relative to user AOI. |

Action Control#

| Node Name | Description |

|---|---|

| ActionUnconditionalMode | Enables the unconditional action command mode where action commands are processed even when the primary control channel is closed. |

| ActionQueueSize | Indicates the size of the scheduled action commands queue. |

| ActionDeviceKey | Provides the device key that allows the device to check the validity of action commands. |

| ActionSelector | Selects to which Action Signal further Action settings apply. |

| ActionGroupKey | Provides the key that the device will use to validate the action on reception of the action protocol message. |

| ActionGroupMask | Provides the mask that the device will use to validate the action on reception of the action protocol message. |
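
The way the keys and mask work together can be sketched in a few lines of Python. This is a minimal model of the acceptance check as generally described for GigE Vision action commands: the device key and group key must match exactly, and the bitwise AND of the command's group mask with ActionGroupMask must be non-zero. The class and function names are hypothetical, and the handling of the control channel is a simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionConfig:
    device_key: int                   # ActionDeviceKey
    group_key: int                    # ActionGroupKey for the selected action
    group_mask: int                   # ActionGroupMask for the selected action
    unconditional_mode: bool = False  # ActionUnconditionalMode


def accepts_action(cfg, cmd_device_key, cmd_group_key, cmd_group_mask,
                   control_channel_open=True):
    """Return True if the modelled device would act on the received action command."""
    if not control_channel_open and not cfg.unconditional_mode:
        return False  # without unconditional mode, a closed control channel blocks actions
    return (
        cmd_device_key == cfg.device_key
        and cmd_group_key == cfg.group_key
        and (cmd_group_mask & cfg.group_mask) != 0
    )


if __name__ == "__main__":
    camera = ActionConfig(device_key=0x1234, group_key=1, group_mask=0b0001)
    print(accepts_action(camera, 0x1234, 1, 0b0011))  # mask bits overlap
    print(accepts_action(camera, 0x1234, 1, 0b0010))  # no overlapping mask bit
```

Giving each camera its own bit in ActionGroupMask lets a single broadcast command address any subset of cameras that share the same device key and group key.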

Chunk Data Control#

| Node Name | Description |

|---|---|

| ChunkModeActive | Activates the inclusion of Chunk data in the payload of the image. |

| ChunkSelector | Selects which Chunk to enable or control. |

| ChunkEnable | Enables the inclusion of the selected Chunk data in the payload of the image. |

| ChunkCRC | CRC chunk data. |

| ChunkLineStatusAll | Chunk Line Status All chunk data. |

| ChunkSequencerSetActive | Chunk Sequencer Set Active chunk data. |

| ChunkPixelFormat | Chunk Pixel Format chunk data. |

| ChunkWidth | Chunk Width chunk data. |

| ChunkHeight | Chunk Height chunk data. |

| ChunkOffsetX | Chunk Offset X chunk data. |

| ChunkOffsetY | Chunk Offset Y chunk data. |

| ChunkPixelDynamicRangeMin | Chunk Pixel Dynamic Range Min chunk data. |

| ChunkPixelDynamicRangeMax | Chunk Pixel Dynamic Range Max chunk data. |

| ChunkGain | Chunk Gain chunk data. |

| ChunkBlackLevel | Chunk Black Level chunk data. |

| ChunkExposureTime | Chunk Exposure Time chunk data. |

Color Transformation Control#

Color Transformation Control contains features that control the color transformation applied to an image, such as RGB to YUV conversion. These features are not available on monochrome cameras.

| Node Name | Description |

|---|---|

| ColorTransformationEnable | Controls if the selected color transformation module is activated. |

| ColorTransformationSelector | Selects which Color Transformation module is controlled by the various Color Transformation features. |

| ColorTransformationValueSelector | Selects the Gain factor or Offset of the Transformation matrix to access in the selected Color Transformation module. |

| ColorTransformationValue | Represents the value of the selected Gain factor or Offset inside the Transformation matrix. |
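
A transformation defined by gain factors and offsets is usually a 3x3 matrix multiplication followed by the addition of an offset vector. The Python sketch below shows that arithmetic on the host. The coefficients are illustrative BT.601 RGB to YUV values and are an assumption, not values read from the camera. The function name is hypothetical.

```python
# Illustrative BT.601 RGB-to-YUV gains (assumption, not camera defaults).
RGB_TO_YUV_GAIN = (
    (0.299, 0.587, 0.114),
    (-0.14713, -0.28886, 0.436),
    (0.615, -0.51499, -0.10001),
)
NO_OFFSET = (0.0, 0.0, 0.0)


def apply_color_transformation(pixel, gain=RGB_TO_YUV_GAIN, offset=NO_OFFSET):
    """Compute gain * pixel + offset for one three-component pixel."""
    return tuple(
        sum(g * component for g, component in zip(row, pixel)) + row_offset
        for row, row_offset in zip(gain, offset)
    )


if __name__ == "__main__":
    y, u, v = apply_color_transformation((255, 255, 255))
    print(round(y, 3), round(u, 3), round(v, 3))
```

Each entry of the gain matrix and each offset corresponds to one value that would be chosen with ColorTransformationValueSelector and written through ColorTransformationValue.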

Counter and Timer Control#

| Node Name | Description |

|---|---|

| CounterSelector | Selects which Counter to configure. |

| CounterEventSource | Selects the events that will be the source to increment the Counter. |

| CounterEventActivation | Selects the Activation mode of the Event Source signal. |

| CounterTriggerSource | Selects the source to start the Counter. |

| CounterTriggerActivation | Selects the Activation mode of the trigger to start the Counter. |

| CounterResetSource | Selects the signals that will be the source to reset the Counter. |

| CounterResetActivation | Selects the Activation mode of the Counter Reset Source signal. |

| CounterReset | Does a software reset of the selected Counter and starts it. |

| CounterValue | Reads or writes the current value of the selected Counter. |

| CounterValueAtReset | Reads the value of the selected Counter when it was reset by a trigger or by an explicit CounterReset command. |

| CounterDuration | Sets the duration (or number of events) before the Counter End event is generated. |

| CounterStatus | Returns the current status of the Counter. |

| TimerSelector | Selects which Timer to configure. |

| TimerTriggerSource | Selects the source to start the Timer. |

| TimerTriggerActivation | Selects the Activation mode of the trigger to start the Timer. |

| TimerDuration | Sets the duration (in microseconds) of the Timer pulse. |

| TimerDelay | Sets the duration (in microseconds) of the delay to apply at the reception of a trigger before starting the Timer. |

| TimerReset | Does a software reset of the selected Timer and starts it. |

| TimerValue | Reads or writes the current value of the selected Timer. |

| TimerStatus | Returns the current status of the Timer. |
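
TimerDelay and TimerDuration together determine when the Timer pulse is active after a trigger. The Python sketch below works out that window, assuming the delay starts counting at the moment the trigger is received and the pulse begins as soon as the delay has elapsed. The function names are hypothetical, and all times are in microseconds.

```python
def timer_pulse_window(trigger_time_us, timer_delay_us, timer_duration_us):
    """Return (start, end) of the Timer pulse in microseconds."""
    if timer_delay_us < 0 or timer_duration_us < 0:
        raise ValueError("delay and duration must be non-negative")
    start = trigger_time_us + timer_delay_us
    return start, start + timer_duration_us


def timer_is_active(now_us, trigger_time_us, timer_delay_us, timer_duration_us):
    """Return True while the modelled Timer pulse is high."""
    start, end = timer_pulse_window(trigger_time_us, timer_delay_us, timer_duration_us)
    return start <= now_us < end


if __name__ == "__main__":
    print(timer_pulse_window(1000.0, 250.0, 500.0))
    print(timer_is_active(1300.0, 1000.0, 250.0, 500.0))
```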

Defect Correction Control#

| Node Name | Description |

|---|---|

| DefectCorrectionEnable | Activates the defect correction feature. |

| DefectCorrectionCount | The number of defect corrected pixels. |

| DefectCorrectionIndex | The index of the defect corrected pixel to access. |

| DefectCorrectionPositionX | The column index of the defect corrected pixel selected by DefectCorrectionIndex. |

| DefectCorrectionPositionY | The row index of the defect corrected pixel selected by DefectCorrectionIndex. |

| DefectCorrectionGetNewDefect | Get a new defect corrected pixel to be entered. |

| DefectCorrectionApply | Apply the new defect corrected pixel. |

| DefectCorrectionRemove | Remove the defect corrected pixel selected by DefectCorrectionIndex. |

| DefectCorrectionSave | Save the defect corrected pixels to non-volatile memory. |

| DefectCorrectionRestoreDefault | Reset the defect correction to factory default. |

Device Control#

Device Control contains features that provide information about the capabilities of the device. There are also features that describe the particular device in detail. These features can be used in applications to query the capabilities of the device and report them to the end user if needed.

Below is a list of the available features contained in this category.

| Node Name | Description |

|---|---|

| DeviceType | Returns the device type. |

| DeviceScanType | Scan type of the sensor of the device. |

| DeviceVendorName | Name of the manufacturer of the device. |

| DeviceModelName | Model of the device. |

| DeviceFamilyName | Identifier of the product family of the device. |

| DeviceManufacturerInfo | Provides additional information from the vendor about the device. |

| DeviceVersion | Version of the device. |

| DeviceFirmwareVersion | Version of the firmware in the device. |

| DeviceSerialNumber | Device’s unique serial number. |

| DeviceUserID | A device ID string that is user-programmable. |

| DeviceSFNCVersionMajor | Major version of the Standard Features Naming Convention (SFNC) that was used to create the device’s GenICam XML. |

| DeviceSFNCVersionMinor | Minor version of the Standard Features Naming Convention (SFNC) that was used to create the device’s GenICam XML. |

| DeviceSFNCVersionSubMinor | Sub minor version of the Standard Features Naming Convention (SFNC) that was used to create the device’s GenICam XML. |

| DeviceManifestEntrySelector | Manifest entry selector. |

| DeviceManifestXMLMajorVersion | Indicates the major version number of the GenICam XML file of the selected manifest entry. |

| DeviceManifestXMLMinorVersion | Indicates the minor version number of the GenICam XML file of the selected manifest entry. |

| DeviceManifestXMLSubMinorVersion | Indicates the sub minor version number of the GenICam XML file of the selected manifest entry. |

| DeviceManifestSchemaMajorVersion | Indicates the major version number of the schema file of the selected manifest entry. |

| DeviceManifestSchemaMinorVersion | Indicates the minor version number of the schema file of the selected manifest entry. |

| DeviceManifestPrimaryURL | Indicates the first URL to the GenICam XML device description file of the selected manifest entry. |

| DeviceManifestSecondaryURL | Indicates the second URL to the GenICam XML device description file of the selected manifest entry. |

| DeviceTLType | Transport Layer type of the device. |

| DeviceTLVersionMajor | Major version of the Transport Layer of the device. |

| DeviceTLVersionMinor | Minor version of the Transport Layer of the device. |

| DeviceTLVersionSubMinor | Sub minor version of the Transport Layer of the device. |

| DeviceMaxThroughput | Maximum bandwidth of the data that can be streamed out of the device. |

| DeviceLinkSelector | Selects which Link of the device to control. In general, the device only has one link. |

| DeviceLinkSpeed | Indicates the speed of transmission negotiated on the specified Link selected by DeviceLinkSelector. |

| DeviceLinkThroughputLimitMode | Controls if the DeviceLinkThroughputLimit is active. |

| DeviceLinkThroughputLimit | Limits the maximum bandwidth of the data that will be streamed out by the device on the selected Link. |

| DeviceLinkThroughputReserve | Allocates the maximum percentage of bandwidth reserved for re-transmissions. |

| DeviceLinkHeartbeatMode | Activates or deactivates the selected Link’s heartbeat. |

| DeviceLinkHeartbeatTimeout | Controls the current heartbeat timeout of the specific Link in microseconds. |

| DeviceLinkCommandTimeout | Indicates the command timeout of the specified Link in microseconds. |

| DeviceStreamChannelCount | Indicates the number of stream channels supported by the device. |

| DeviceStreamChannelSelector | Selects the stream channel to control. |

| DeviceStreamChannelType | Reports the type of the stream channel. |

| DeviceStreamChannelEndianness | Endianness of multi-byte pixel data for this stream. |

| DeviceStreamChannelPacketSize | Specifies the stream packet size, in bytes, to send on the selected channel for the device. |

| DeviceEventChannelCount | Indicates the number of event channels supported by the device. |

| DeviceCharacterSet | Character set used by all the strings of the device. |

| DeviceReset | Resets the device to its power up state. |

| DeviceFactoryReset | Resets device to factory defaults. |

| FirmwareUpdate | Starts a firmware update. |

| DeviceFeaturePersistenceStart | Indicate to the device and GenICam XML to get ready for persisting of all streamable features. |

| DeviceFeaturePersistenceEnd | Indicate to the device the end of feature persistence. |

| DeviceRegistersStreamingStart | Prepare the device for registers streaming without checking for consistency. |

| DeviceRegistersStreamingEnd | Announce the end of registers streaming. |

| DeviceIndicatorMode | Controls the behavior of the indicator LED showing the status of the Device. |

| DeviceTemperatureSelector | Selects the temperature sensor to read from. |

| DeviceTemperature | Device temperature in degrees Celsius. |

| DevicePressure | The internal device pressure in kilopascals. |

| DevicePower | Device power in Watts. |

| DeviceClockSelector | Selects the clock frequency to access from the device. |

| DeviceClockFrequency | Returns the frequency of the selected Clock. |

| Timestamp | Reports the current value of the device timestamp counter. |

| TimestampReset | Resets the current value of the device timestamp counter. Executing this command causes the timestamp counter to restart automatically. |

| TimestampLatch | Latches the current timestamp counter into TimestampLatchValue. |

| TimestampLatchValue | Returns the latched value of the timestamp counter. |

| DeviceUpTime | Time the device has been powered in seconds. |

| LinkUpTime | Time the device link has been established in seconds. |
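
When DeviceLinkThroughputLimit is active, it caps the frame rate the link can sustain. As a rough host-side estimate, the Python sketch below divides the limit by the size of one frame. It assumes the limit is expressed in bytes per second, and it ignores packet headers, chunk data, and any bandwidth set aside by DeviceLinkThroughputReserve, so the real maximum will be somewhat lower. The function name and the example image size are hypothetical.

```python
def estimated_max_frame_rate(throughput_limit_bytes_per_s, width, height, bits_per_pixel):
    """Estimate the frame rate a throughput limit allows, ignoring protocol overhead."""
    if min(throughput_limit_bytes_per_s, width, height, bits_per_pixel) <= 0:
        raise ValueError("all arguments must be positive")
    frame_bytes = width * height * bits_per_pixel / 8
    return throughput_limit_bytes_per_s / frame_bytes


if __name__ == "__main__":
    # Hypothetical example: a 1920 x 1080 image at 8 bits per pixel with a 100 MB/s limit.
    fps = estimated_max_frame_rate(100_000_000, 1920, 1080, 8)
    print(f"{fps:.2f} frames per second")
```

The same arithmetic can be reversed to choose a limit for a target frame rate when several cameras share one network link.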

Digital IO Control#

| Node Name | Description |